Building RAG-based LLM Applications with DataStax and Fiddler

Enterprises are increasingly choosing to launch retrieval augmented generation (RAG)-based LLM applications, as it’s an efficient and cost-effective deployment method. RAG enables AI teams to build applications on top of existing open-source LLMs or LLMs provided by the likes of OpenAI, Cohere, or Anthropic. Additionally, with RAG, enterprises can introduce time-sensitive and private information not possible with foundation models alone.

We’re excited to announce a partnership that enables enterprises and startups to put accurate, RAG applications in production more quickly with DataStax Astra DB and Fiddler’s AI Observability platform. Before discussing the partnership in further detail, we will first cover the basics of RAG and RAG-based LLMs.

[h2] What is retrieval augmented generation (RAG)?

RAG) represents a pivotal advancement in artificial intelligence, merging the capabilities of large language models (LLMs) with the dynamic retrieval of external data to enrich and inform the generation process. This methodology enables the production of responses that are not only contextually relevant but also deeply informed by up-to-date and expansive datasets. By leveraging knowledge available beyond its original training parameters, RAG broadens the scope and accuracy of generated content, making it particularly effective for complex query answering and content creation tasks.

The essence of RAG lies in its ability to conduct on-the-fly searches across extensive databases or the internet to pull in the most relevant information needed to answer a given question or address a specific topic. This process allows RAG to supplement the fixed knowledge encapsulated within a model at the time of its training with fresh, specific data. As a result, RAG-based systems can offer more precise, detailed, and contextually rich outputs compared to their traditional counterparts, which may only draw upon a static knowledge base. This dynamic interaction between retrieval and generation marks a significant leap forward in making AI interactions more meaningful, accurate, and informative.

Want to learn more about retrieval augmented generation? Check out our comprehensive guide on RAG.

What are RAG LLM applications?

RAG-based LLM applications involve utilizing large language models enhanced by external data retrieval for various tasks. These applications range from advanced search systems, personalized content recommendations, and customer support chatbots to academic research assistants. They leverage RAG's ability to dynamically incorporate relevant, up-to-date information from external sources into the model's response generation process, thereby providing more accurate, contextually aware, and informative outputs across different domains.

How does RAG work?

The RAG framework operates by integrating the predictive power of LLMs with an external information retrieval process. Initially, when a query is received, RAG identifies key concepts within the question and uses these to fetch relevant data from a vast repository of information. Once retrieved, this data is fed back into the language model, enriching its context and enabling it to generate responses that are accurate and highly tailored to the specific query at hand. This dual-process system ensures that responses are informed by the most current and comprehensive data available, significantly enhancing the model's utility and effectiveness across various applications.

Why is RAG important?

The importance of RAG extends significantly beyond just accuracy and knowledge expansion. RAG is pivotal for several reasons:

-

Enables contextual understanding

By integrating current information, RAG provides responses that are contextually aware, enhancing user interaction.

-

Supports complex queries

It excels in handling complex, nuanced questions that require deep understanding and up-to-date information.

-

Facilitates personalization

RAG can tailor information retrieval to individual user preferences or historical interactions, offering personalized responses.

-

Improves efficiency

It reduces the computational load on LLMs by retrieving only relevant information, making the process faster and more resource-efficient.

-

Enhances learning and adaptation

Over time, RAG systems can improve their query handling and information retrieval techniques, leading to smarter, more efficient operations.

A simple recipe for deploying RAG-based LLM applications

What’s been so surprising about the proliferation of LLM-based applications over the past year is how powerful they are proving to be for a variety of knowledge reasoning tasks, while, at the same time, proving extremely simple architecturally. The benefits of RAG-based LLM applications have been well-understood for some time now.

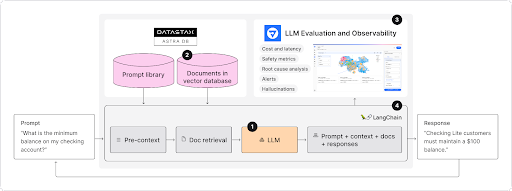

To build these “reasoning applications” only requires a few key ingredients:

- A LLM foundation model

- Documents stored in a vector database for retrieval

- An observability layer to tune the system, detect issues, and ensure proper performance

- And LangChain or a LlamaIndex toolkit to orchestrate the workflow and data movement

Yet, as with any recipe, the final product is only as good as the quality of the ingredients we choose.

The best-of-breed RAG ingredients

DataStax Astra DB

Astra DB is a Database-as-a-Service (DBaaS) that enables vector search and gives you the real-time vector and non-vector data to quickly build accurate generative AI applications and deploy them in production. Built on Apache Cassandra®, Astra DB adds real-time vector capabilities that can scale to billions of vectors and embeddings; as such, it’s a critical component in a GenAI application architecture. Real-time data reads, writes, and availability are critical to prevent AI hallucinations. As a serverless, distributed database, Astra DB supports replication over a wide geographic area, supporting extremely high availability. When ease-of-use and relevance at scale matter, Astra DB is the vector database of choice.

The Fiddler AI Observability Platform

The Fiddler AI Observability platform helps customers address the concerns surrounding generative AI. Whether AI teams are launching AI applications using open source, in-house-built LLMs, or closed LLMs provided by OpenAI, Anthropic, or Cohere, Fiddler equips users across the organization with an end-to-end LLMOps experience, from pre-production to production. With Fiddler, users can validate, monitor, analyze, and improve RAG applications. Fiddler offers many out-of-the-box enrichments that produce metrics to identify safety and privacy issues like toxicity and PII-leakage as well as correctness metrics like faithfulness and hallucinations.

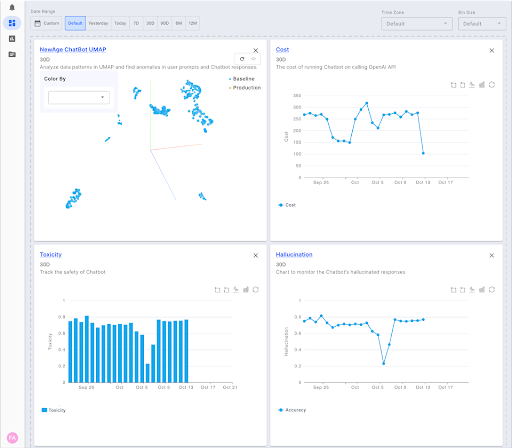

Analyze unstructured data on the 3D UMAP to identify data patterns and problematic prompts and responses

Why DataStax and Fiddler AI together?

DataStax and Fiddler AI together can provide enterprises and startups with monitoring capabilities to help them meet safety, accuracy and control requirements for putting RAG applications into production with the following:

- Enterprise-level requirements Cost, data privacy, and lack of control will still push enterprises to host their own data in a vector database.

- Meeting evolving complexities in LLMs Building with LLMs is no longer just a simple LLM call and getting back a text completion. These LLM APIs will now involve complex components like retrievers, threads, prompt chains, and access to tools—all of which need to be logged and monitored.

- LLM deployment method selection Prompt engineering, RAG, and fine-tuning can all be leveraged, but which one to use and when to use it depends on the task at hand. Startups or enterprises might take different approaches based on whether the LLM application they are building is internal or external. What is the risk vs reward tradeoff?

- Continuous LLM evaluation and monitoring Lastly, evaluation is still pretty hard! Regardless of how you use and apply LLMs, it won’t matter much if you are not consistently evaluating. With many changes on the horizon, customers should always be keeping close tabs on their LLM after they launch their AI application.

In short, getting started with RAG applications can be done in minutes. However, as the enterprise consumers of these applications demand more accuracy, safety, and transparency from these business-critical applications, enterprises will naturally gravitate toward the stack that provides the most control and deep feature set required.

Fiddler’s AI chatbot use case

Enterprises deploy LLM applications to innovate, obtain competitive advantage, generate new revenue, and enhance customer satisfaction. Fiddler built an AI chatbot for their documentation to help improve the customer experience of the Fiddler AI Observability platform. The chatbot offers tips and best practices for using Fiddler to monitor predictive and generative models..

Fiddler chose Astra DB for their chatbot’s vector database and were able to quickly set up an environment that had immediate access to multiple API endpoints. Using Astra’s Python libraries, Fiddler stored prompt history along with the embeddings for the documents in their data set. After publishing the chatbot conversations to the Fiddler platform, chatbot performance could be analyzed along with multiple key metrics, including cost, hallucinations, correctness, toxicity, data drift, and more.

The Fiddler platform offers out-of-the-box dashboards that use multiple visualizations to track the chatbot performance over time under different load scenarios and could compare responses with different cohorts of users. The Fiddler platform also enables the chatbot operators to conduct root-cause analysis when issues are detected.

Monitor, report and collaborate with technical and business teams using dashboards and charts

You can learn more about Fiddler’s experience of developing this chatbot at the recent AI Forward 2023 Summit session Chat on Chatbots: Tips and Tricks.

Getting started with retrieval-augmented generation with DataStax

Astra DB Vector offers an unparalleled solution for developing AI applications on real-time data, merging NoSQL database functionality with streaming features. Start a free trial today on the industry-leading and most expandable vector database.

In our next post in this series, we’ll provide a technical deep dive into the integration of this RAG stack, including code examples and more. Meanwhile, try Astra DB for free.