Self-managed cloud deployments and on-premises infrastructure for AI software solutions remain a key part of enterprise architecture. AI inference costs are coming down as NVIDIA continues to release new GPU architectures, such as NVIDIA Blackwell Ultra, that scale compute and inference performance to boost efficiency. AI factory offerings from companies like Dell and IBM are designed to be one-stop shops for on-prem AI workloads and make optimized AI hardware more accessible to companies. This highlights the need for easy-to-use AI software platforms that can unlock the value of that hardware for enterprise developers. That’s where the DataStax AI Platform, built with NVIDIA AI, comes in.

Why self-managed and on-premises infrastructure?

Self-managed infrastructure, whether in the cloud or on-premises, remains a popular and vital option for many enterprises. Enterprises adopt self-managed solutions for a variety of reasons; common ones include security, regulatory and data sovereignty obligations, latency requirements, and multi-cloud or hybrid deployments.

AI applications and systems also bring new reasons to run software and models in company-owned and controlled environments, such as the cost of third-party AI services, the ability to train and fine-tune models to more specific requirements, and agentic integration with existing technologies.

Self-managed DataStax AI Platform, built with NVIDIA AI

DataStax AI Platform, built with NVIDIA AI, offers a complete solution for rapidly prototyping, designing, deploying, and scaling AI applications. With support for the latest NVIDIA Blackwell GPUs, including NVIDIA RTX PRO Servers for universal enterprise workloads and advanced AI systems such as the NVIDIA B200 (both supported in the new NVIDIA Enterprise AI Factory validated reference design), DataStax AI enables enterprises to get the most out of their infrastructure investments.

Several key challenges exist in building AI applications in self-managed environments:

- All components of the application need to run in the self-managed environment
- Software needs to be deployed and operationalized easily

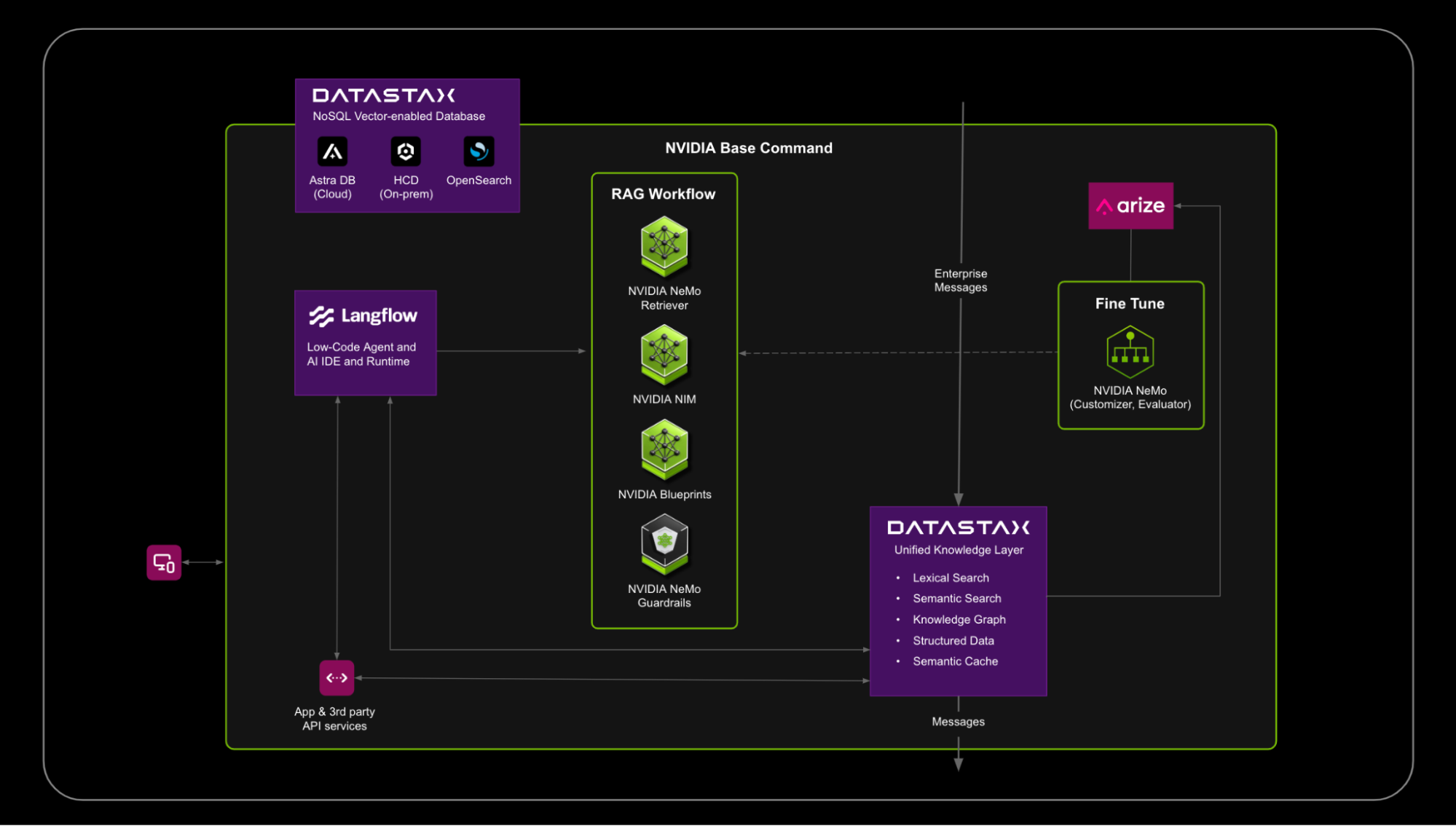

DataStax is working with NVIDIA and Arize to bring a complete AI platform to enterprises for self-managed infrastructure, including:

- The low-code development and production run-time platform Langflow for rapid development and deployment of AI agents and workflows
- The Arize observability and evaluation platform to improve accuracy in AI apps
- Data storage and retrieval with Hyper-Converged Database (HCD) for real-time enterprise workloads at any scale and OpenSearch for search and analytics
- NVIDIA NeMo services and NVIDIA NIM:
  - NVIDIA NeMo Retriever for unstructured data ingestion, embedding, and reranking models
  - NVIDIA NIM for highly performant LLMs and SLMs
  - NVIDIA NeMo Guardrails to protect your AI systems
  - The NVIDIA NeMo stack for model fine-tuning, including NeMo Curator, NeMo Customizer, and NeMo Evaluator

Further, DataStax helps with deploying and operationalizing these systems by providing tools to manage CPU and GPU resources running on Kubernetes, including common cloud provider options like Amazon EKS, Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS), as well as on-premises options like Red Hat OpenShift.

Use case: Agents and RAG

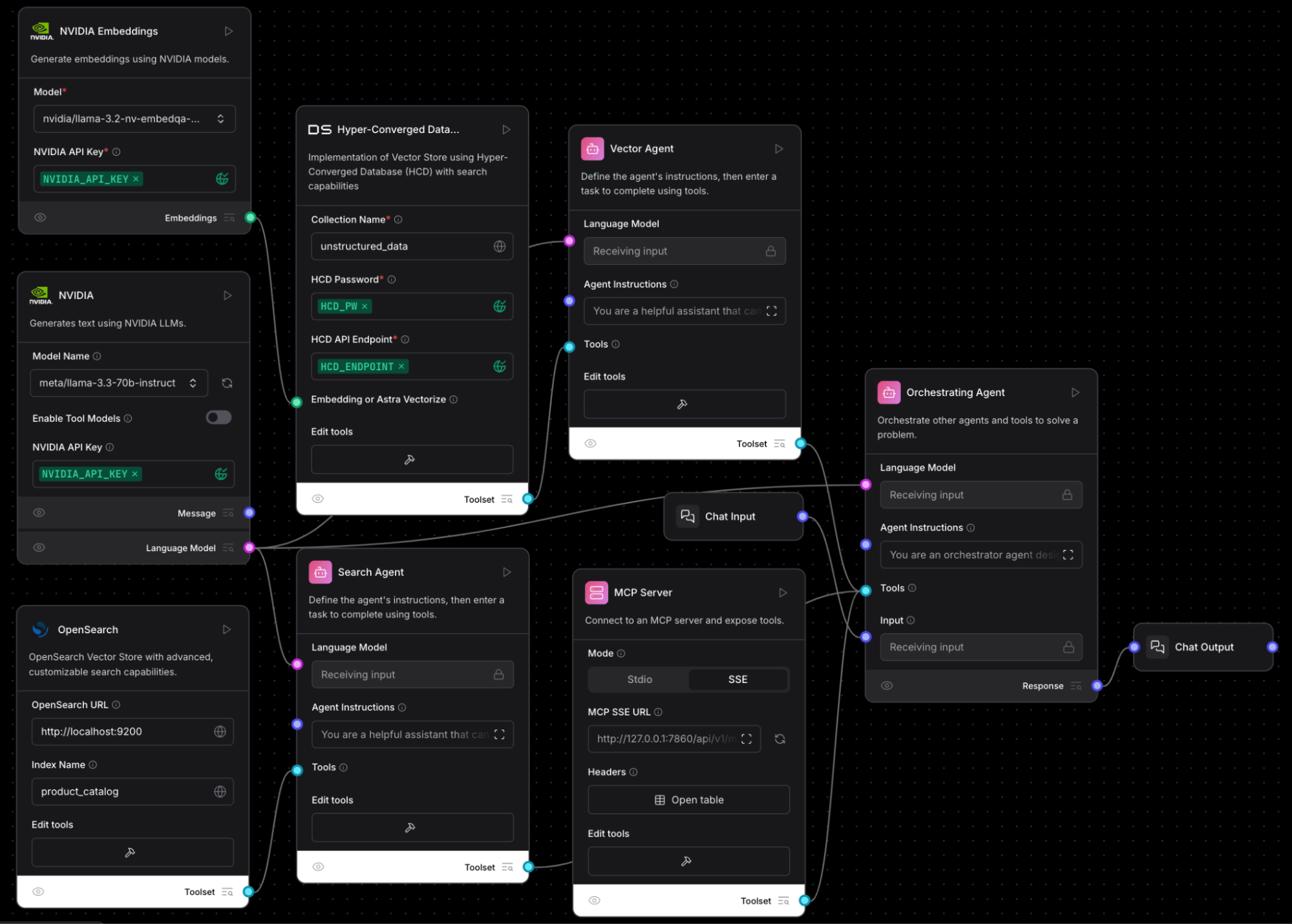

How do DataStax customers use the AI platform in their systems? One common pattern we see is multi-agent systems for RAG. Using Langflow, enterprises build agents that know how to talk to different data systems and gather both structured and unstructured data as context for solving problems.

Enterprises can also use standard protocols such as Model Context Protocol (MCP) to connect to different systems.

The image above shows an example application built in Langflow in which an Orchestrating Agent coordinates two sub-agents and one tool to answer questions. The first sub-agent is a vector search agent.

Unstructured PDF data has been loaded to HCD using NVIDIA NeMo Retriever extraction to process the PDFs and NVIDIA NeMo Retriever embedding NIM to generate the vectors.

The second sub-agent understands how to talk to OpenSearch and retrieve enterprise search data.

Finally, there’s an MCP server that retrieves information from an internal system. Langflow enables enterprises to build this entire system in minutes, test its behavior in the interactive playground, and then run it in production on the Langflow runtime, which exposes a RESTful API service for executing flows.
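The delegation pattern in this example (one orchestrator, two sub-agents, one tool) can be sketched in plain Python. This is only a toy illustration, not how Langflow implements agents: every function name here is hypothetical, the handlers return placeholder strings instead of querying HCD, OpenSearch, or an MCP server, and simple keyword matching stands in for the LLM's decision about which sub-agent or tool to call.

```python
from typing import Callable, Dict


# Hypothetical stand-ins for the three capabilities in the example flow.
def vector_search_agent(question: str) -> str:
    return f"[vector search over PDF chunks] {question}"


def enterprise_search_agent(question: str) -> str:
    return f"[enterprise search] {question}"


def internal_system_tool(question: str) -> str:
    return f"[internal system via MCP] {question}"


# Keyword routing is a deliberately crude placeholder for the choice
# that an LLM-backed orchestrating agent would make.
ROUTES: Dict[str, Callable[[str], str]] = {
    "document": vector_search_agent,
    "search": enterprise_search_agent,
    "ticket": internal_system_tool,
}


def orchestrate(question: str) -> str:
    """Send the question to the first handler whose keyword matches."""
    lowered = question.lower()
    for keyword, handler in ROUTES.items():
        if keyword in lowered:
            return handler(question)
    # Fall back to vector search when no keyword matches.
    return vector_search_agent(question)


print(orchestrate("What is the status of ticket 123?"))
```

In a real flow the orchestrator would typically see a description of each sub-agent and tool and decide which to invoke, possibly calling several and combining their answers.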

The Langflow runtime is also exposed as an MCP service, which can be used to integrate Langflow into AI applications.
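As a rough illustration of calling a deployed flow over the RESTful API, the sketch below builds a request with the Python standard library. The endpoint path (`/api/v1/run/<flow_id>`), the payload fields (`input_value`, `input_type`, `output_type`), and the `x-api-key` header reflect the open-source Langflow API as we understand it; treat them as assumptions and check them against the API reference for your deployed version. The host, flow ID, and API key are placeholders.

```python
import json
import urllib.request


def build_run_request(base_url: str, flow_id: str, question: str,
                      api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that asks a flow to run."""
    # Assumed endpoint shape; verify against your Langflow version.
    url = f"{base_url.rstrip('/')}/api/v1/run/{flow_id}"
    payload = {
        "input_value": question,  # the user's message
        "input_type": "chat",
        "output_type": "chat",
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-api-key": api_key},
        method="POST",
    )


# Placeholder values for illustration only.
request = build_run_request(
    "http://localhost:7860", "my-flow-id",
    "What does our travel policy say about rail?", "YOUR_API_KEY",
)
print(request.get_method(), request.full_url)

# To actually execute the flow against a running server:
# with urllib.request.urlopen(request) as response:
#     result = json.load(response)
```

Building the request separately from sending it keeps the example runnable without a server and makes the URL and payload easy to inspect or unit test.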

Use case: Continuously improve AI systems with fine-tuning and model training

Enterprises commonly require fine-tuned models because fine-tuning lets them tailor pre-trained models to specific business needs and datasets. This customization improves accuracy and relevance, helping remove a key blocker to getting apps production-ready.

Additionally, fine-tuning is often used in distillation to make better use of GPU resources by achieving the same accuracy with smaller models. This is particularly important in self-managed environments, where the enterprise pays for fixed compute capacity rather than per token, as it would with cloud services. Because the capacity cost is fixed, scaling up inference throughput on that hardware reduces the total cost of ownership for AI.
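The cost argument can be made concrete with a little arithmetic. The sketch below computes the effective cost per million tokens on fixed capacity; the hourly cost and throughput figures are made-up illustrative numbers, not measurements of any particular GPU or model.

```python
def cost_per_million_tokens(hourly_cost: float, tokens_per_second: float) -> float:
    """Effective cost per one million tokens on fixed-cost hardware."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost / tokens_per_hour * 1_000_000


# Hypothetical numbers: the same hardware cost, with a smaller distilled
# model assumed to sustain four times the throughput of the larger one.
baseline = cost_per_million_tokens(hourly_cost=10.0, tokens_per_second=1_000)
distilled = cost_per_million_tokens(hourly_cost=10.0, tokens_per_second=4_000)

print(f"baseline:  {baseline:.2f} per million tokens")
print(f"distilled: {distilled:.2f} per million tokens")
```

With these assumed figures, the effective cost drops from about 2.78 to about 0.69 per million tokens: when the hourly cost is fixed, cost per token falls in direct proportion to throughput.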

Creating a process of continuous AI system improvement is key for enterprises to be successful with AI. DataStax AI Platform, built with NVIDIA AI, provides the components needed for this: the data stores, data collection and monitoring with Arize, Langflow to orchestrate the training jobs, and seamless deployment of fine-tuned models into existing Langflow flows for use with agents.

Learn more about the building blocks to get you from zero to production with agents and GenAI applications in the AI Learning Center for Tech Leaders.