Agents are getting a lot of attention as the next major evolution within generative AI applications. Teams are eager to build complex agents that can perform intricate tasks and even make decisions on a user's behalf.

To enable this, many teams are looking at agentic workflows—networks of specialized agents that can break down complex tasks into subtasks and coordinate a final result. While this might sound complex initially, agentic workflows are easy to build and deploy—if you have the right tools.

Here, we'll look at what agentic workflows are, why (and when) you need them, and how to get started building and deploying them easily today.

What are agentic workflows?

Agentic workflows are networks of autonomous GenAI agents that perform complex tasks with minimal human intervention, making decisions and taking actions on a user's behalf.

An agent is autonomous code that can perform an action. But there’s only so much you can do in one agent before it becomes too complex, unwieldy, and over-generalized.

Agentic AI workflows take the notion of an agent one step further by harnessing large language models (LLMs) themselves to break down work, parceling out subtasks to agents that specialize in answering certain queries. The workflow can implement complex processes, such as:

- Choosing which external tools to call to generate a more accurate answer

- Selecting different strategies based on the nature of a question

- Implementing verification processes to assess the quality of other agents’ answers

- Handling failover gracefully (e.g., using another service if an API is currently unresponsive)
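The failover behavior in the last bullet can be sketched in a few lines of Python. This is a minimal illustration, not Langflow or LangChain code. `call_with_failover`, `AllProvidersFailed`, and the two provider functions are hypothetical names, and the sketch assumes that any exception raised by a provider means "unresponsive, try the next one."

```python
from typing import Callable, List, Sequence


class AllProvidersFailed(Exception):
    """Raised when every provider in the failover chain has failed."""


def call_with_failover(providers: Sequence[Callable[[str], str]], query: str) -> str:
    """Try each provider in order of preference and return the first successful answer."""
    errors: List[str] = []
    for provider in providers:
        try:
            return provider(query)
        except Exception as exc:  # e.g., a timeout from an unresponsive API
            errors.append(f"{provider.__name__}: {exc!r}")
    raise AllProvidersFailed("; ".join(errors) or "no providers configured")


# Hypothetical providers standing in for real external services.
def primary_search_api(query: str) -> str:
    raise TimeoutError("primary API is not responding")


def backup_search_api(query: str) -> str:
    return f"backup result for: {query}"


print(call_with_failover([primary_search_api, backup_search_api], "cherry blossom forecast"))
# prints: backup result for: cherry blossom forecast
```

The order of the `providers` list encodes preference. A production version would likely add per-call timeouts and retries with backoff before moving on to the next service.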

Built well, agentic workflows can answer users’ questions with greater accuracy, in less time. Creating an agentic workflow architecture also fosters the creation of many specialized, independent agents, which enables quickly composing new GenAI applications from pre-built components.

How agents in agentic workflows work

Think of agentic AI as the self-driving car of AI agents - an intelligent and flexible AI that can adapt its approach to different user queries and changing circumstances.

For example, an agent might kick off a workflow by asking an LLM to break a complex question—such as "Can you take these receipts and file an expense report for me?"—into subtasks. It might then call separate agents to complete the task: one to scan and convert receipts into text, one to compile an expense report, and another to apply and enforce corporate rules around expense filings. Each separate agent will make its own LLM calls, supplemented with context obtained using retrieval-augmented generation (RAG), to perform its narrow task.
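The plan-then-dispatch pattern in the expense-report example can be sketched as follows. This is a hypothetical illustration: `plan_subtasks` stands in for the planning LLM call and returns a fixed plan, and the three specialist functions only pretend to do OCR, report compilation, and policy checks. The $500 per-report limit is an invented rule for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Subtask:
    agent: str        # name of the specialist agent that should handle this step
    instruction: str  # what the planner asked that agent to do


def plan_subtasks(request: str) -> List[Subtask]:
    """Stand-in for the planning LLM call. A real planner would prompt a model to
    decompose `request`; this stub returns a fixed plan for the expense example."""
    return [
        Subtask("receipt_scanner", "extract line items from the attached receipts"),
        Subtask("report_builder", "compile the line items into an expense report"),
        Subtask("policy_checker", "check the report against corporate expense rules"),
    ]


# Hypothetical specialist agents. Each reads and writes a shared context dict;
# in practice each would make its own LLM calls, possibly with RAG context.
def receipt_scanner(instruction: str, context: Dict) -> str:
    context["line_items"] = [("taxi", 42.50), ("hotel", 310.00)]  # pretend OCR output
    return f"found {len(context['line_items'])} line items"


def report_builder(instruction: str, context: Dict) -> str:
    context["total"] = sum(amount for _, amount in context["line_items"])
    return f"report total {context['total']:.2f}"


def policy_checker(instruction: str, context: Dict) -> str:
    per_report_limit = 500.00  # hypothetical corporate rule
    context["within_policy"] = context["total"] <= per_report_limit
    return "within policy" if context["within_policy"] else "over the per-report limit"


AGENTS: Dict[str, Callable[[str, Dict], str]] = {
    "receipt_scanner": receipt_scanner,
    "report_builder": report_builder,
    "policy_checker": policy_checker,
}


def run_workflow(request: str) -> Tuple[List[str], Dict]:
    """Plan the request, then dispatch each subtask to its specialist in order."""
    context: Dict = {"request": request}
    trace: List[str] = []
    for task in plan_subtasks(request):
        result = AGENTS[task.agent](task.instruction, context)
        trace.append(f"{task.agent}: {result}")
    return trace, context


trace, context = run_workflow("Can you take these receipts and file an expense report for me?")
for line in trace:
    print(line)
```

Keeping each specialist behind a name in the `AGENTS` registry is what lets the planner's output (plain agent names) drive the dispatch.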

The workflow might contain agents that specialize in decision-making. Based on confidence metrics, these agents could bypass human review or loop a human in when required. For example, a system might automatically approve an expense report based on its content and the user's expense approval history. Conversely, it might flag a report for human review if it suspects a mistake or even fraud, automatically assigning a fraud score.
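A decision agent's final routing step can be as simple as comparing scores against thresholds. In this sketch, `route_report` and `Decision` are hypothetical names; it assumes upstream agents have already produced a confidence value and a fraud score, both in [0, 1], and the thresholds 0.9 and 0.2 are arbitrary examples rather than recommended values.

```python
from enum import Enum
from typing import List, Tuple


class Decision(Enum):
    AUTO_APPROVE = "auto_approve"
    HUMAN_REVIEW = "human_review"


def route_report(confidence: float, fraud_score: float,
                 min_confidence: float = 0.9,
                 max_fraud_score: float = 0.2) -> Tuple[Decision, List[str]]:
    """Auto-approve only when the model is confident and the fraud score is low;
    otherwise loop a human in and record why."""
    for name, value in (("confidence", confidence), ("fraud_score", fraud_score)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    reasons: List[str] = []
    if confidence < min_confidence:
        reasons.append(f"low confidence ({confidence:.2f} < {min_confidence:.2f})")
    if fraud_score > max_fraud_score:
        reasons.append(f"high fraud score ({fraud_score:.2f} > {max_fraud_score:.2f})")
    return (Decision.HUMAN_REVIEW, reasons) if reasons else (Decision.AUTO_APPROVE, [])


print(route_report(confidence=0.97, fraud_score=0.05))
print(route_report(confidence=0.97, fraud_score=0.60))
```

Returning the reasons alongside the decision gives the human reviewer a starting point instead of an unexplained flag.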

Agentic workflows can have different architectures and flows. They might process requests in a sequence, use a hierarchy, implement an evaluator-optimizer approach, or use a combination of these to ensure greater accuracy and quality of the final result.
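Of these architectures, the evaluator-optimizer loop is the least obvious, so here is a minimal sketch of its control flow. The generator and evaluator would each be LLM-backed agents in a real workflow; `toy_generator` and `toy_evaluator` are hypothetical stand-ins so the loop runs on its own. The convention that the evaluator returns `None` to accept a draft is an assumption of this sketch.

```python
from typing import Callable, Optional, Tuple

GenerateFn = Callable[[str, Optional[str]], str]  # (task, feedback) -> draft
EvaluateFn = Callable[[str], Optional[str]]       # draft -> feedback, or None if accepted


def evaluator_optimizer(generate: GenerateFn, evaluate: EvaluateFn, task: str,
                        max_rounds: int = 3) -> Tuple[str, int, bool]:
    """Alternate between a generator and an evaluator until the evaluator accepts
    the draft or the round budget runs out. Returns (draft, rounds_used, accepted)."""
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    feedback: Optional[str] = None
    draft = ""
    for round_no in range(1, max_rounds + 1):
        draft = generate(task, feedback)
        feedback = evaluate(draft)
        if feedback is None:
            return draft, round_no, True
    return draft, max_rounds, False


# Hypothetical stand-ins for two LLM-backed agents.
def toy_generator(task: str, feedback: Optional[str]) -> str:
    draft = f"Summary of {task}"
    if feedback and "period" in feedback:
        draft += "."  # act on the evaluator's feedback
    return draft


def toy_evaluator(draft: str) -> Optional[str]:
    return None if draft.endswith(".") else "Finish the sentence with a period."


print(evaluator_optimizer(toy_generator, toy_evaluator, "agentic workflows"))
# prints: ('Summary of agentic workflows.', 2, True)
```

The `max_rounds` cap matters in practice: without it, a generator that never satisfies the evaluator would loop (and spend tokens) indefinitely.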

Benefits of agentic workflows

Building an agentic workflow is essentially taking a componentized, modular approach to GenAI agents. As a result, it brings many of the same benefits as modular software engineering approaches such as microservices:

Greater accuracy - Agentic workflows can often yield better results because each agent focuses on perfecting a specific task. They can also enforce quality by implementing validators and evaluators, like running a spelling and grammar correction agent on an automatically generated resume, or checking that the output meets certain stylistic conventions or restrictions.

Better performance - Agentic workflows enhance performance by selecting different strategies based on the complexity and context of a user's questions. That ensures that the appropriate, most cost-effective amount of compute power is used for each request.

For example, for a simple request ("show me the current stock price for Company X with an explanation for today's fluctuation"), it might retrieve the answer quickly by calling a single stock ticker agent. Meanwhile, a request to find and book flights and hotels for the optimal Japan trip during cherry blossom season could involve multiple specialized agents, evaluators, and tools.
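The strategy selection in this example can be sketched as a small router. A real workflow would more likely ask an LLM to classify the request; the keyword table here is a hypothetical substitute that keeps the sketch deterministic, and the intent and agent names (`stock_quote`, `flights`, `general_assistant`, and so on) are invented for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical intent -> trigger-phrase table standing in for an LLM classifier.
INTENT_KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "stock_quote": ("stock price", "ticker"),
    "flights": ("flight",),
    "hotels": ("hotel",),
    "itinerary": ("trip", "itinerary"),
}


def detect_intents(request: str) -> List[str]:
    text = request.lower()
    return [intent for intent, phrases in INTENT_KEYWORDS.items()
            if any(phrase in text for phrase in phrases)]


def choose_strategy(request: str) -> Dict[str, object]:
    """Send single-intent requests to one agent; fan multi-intent requests out
    to several specialists and add an evaluator pass."""
    intents = detect_intents(request)
    if len(intents) <= 1:
        return {"strategy": "single_agent", "agents": intents or ["general_assistant"],
                "use_evaluator": False}
    return {"strategy": "multi_agent", "agents": intents, "use_evaluator": True}


print(choose_strategy("Show me the current stock price for Company X"))
print(choose_strategy("Find and book flights and hotels for the optimal Japan trip"))
```

The cost saving comes from the first branch: a simple request never touches the extra agents or the evaluator.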

Better reusability - Rolling too much logic into a single agent prevents other GenAI apps from leveraging its functionality. By breaking your agent architecture into composable parts, new apps can leverage agents from across the company, reducing the time required to ship a new solution to production.

Langflow: Making agentic workflows easy

Treating agents as reusable components means we can stitch them together easily, not just in programming languages, but in visual builder tools, where we can see firsthand how requests flow through the system. Such tools enable building new GenAI apps rapidly with no-code or low-code approaches, increasing the number of developers who can contribute to building new GenAI-powered solutions.

Langflow is one such tool, built on top of the LangChain composable AI framework. Using Langflow, developers can construct their agentic workflows through a drag-and-drop designer, adding support for features such as RAG, chat memory, parallelization, planning, and other agent architecture features with a few clicks. You can run and test your apps directly from the IDE, further decreasing the time required to perfect your workflows.

Langflow has gone all-in on agents and multi-agent architecture. Its latest release, Langflow 1.1, further implements the project's guiding principle that every component in your GenAI architecture should be agentic - i.e., it should be callable from other agents in an agentic workflow.

To enable this, Langflow 1.1 added several new capabilities:

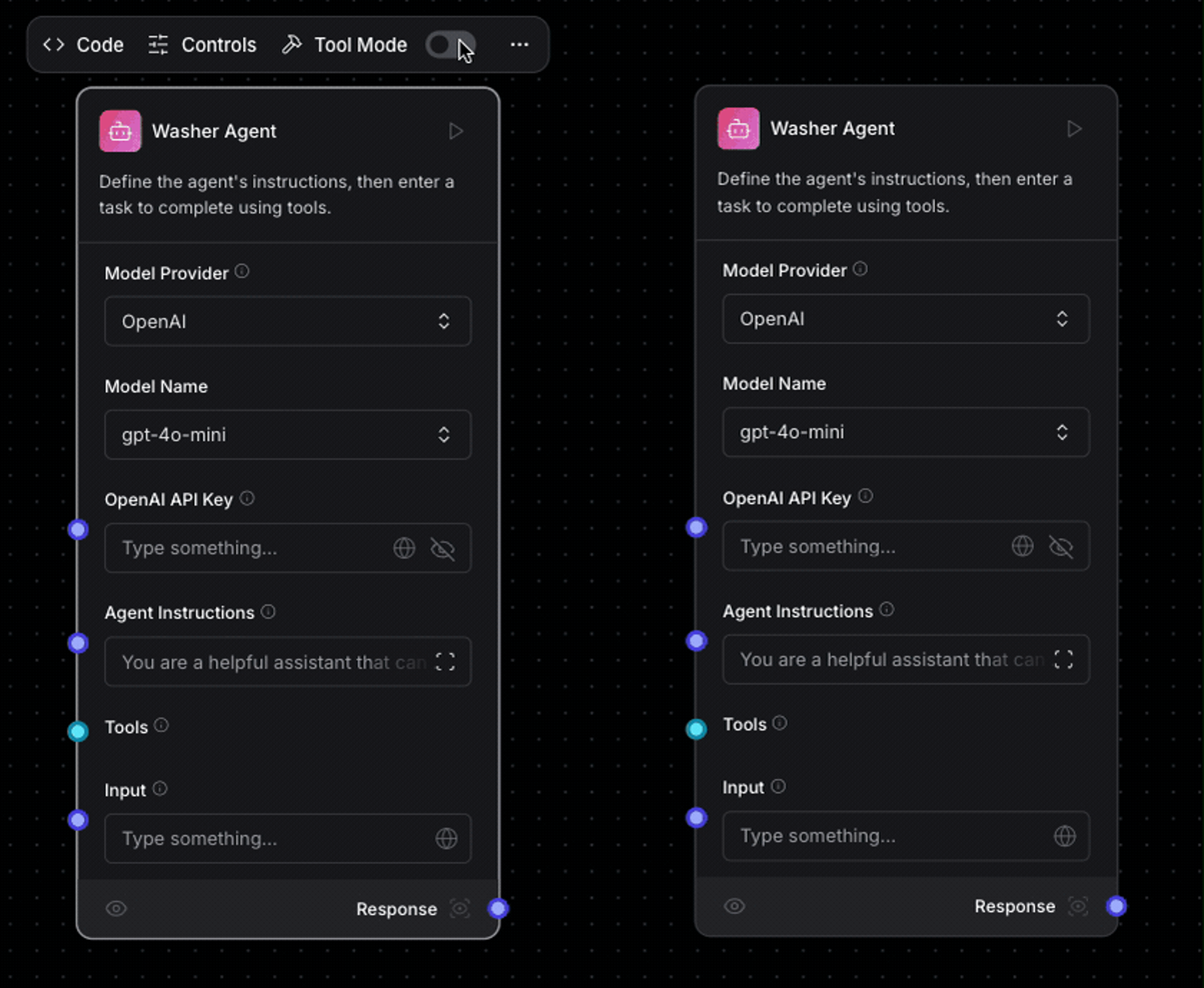

Tool mode

In GenAI parlance, a tool is a component that reaches out to an external resource (such as an external API or a command-line tool) and returns information to the agent. With Langflow, you can turn any component into a tool that an agent can call by enabling tool mode on one or more of its properties. This lets you connect the component's output to the agent's tool input.

In the Langflow IDE, you enable tool mode by flipping the tool mode switch for a given property. An agent can also have tool mode enabled on one or more of its properties, which means other agents can call it as a tool.
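The idea that an agent can itself be a tool comes down to giving agents and tools the same calling interface. The sketch below is not Langflow's internal API: `Tool`, `Agent`, `as_tool`, and `keyword_chooser` are hypothetical names, and picking the first tool named in the query is a crude substitute for the LLM's tool-calling decision.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Tool:
    name: str
    description: str  # what a model would read when deciding whether to call the tool
    func: Callable[[str], str]


ToolChooser = Callable[[str, List[Tool]], Optional[Tool]]


def keyword_chooser(query: str, tools: List[Tool]) -> Optional[Tool]:
    """Stand-in for the LLM's tool-selection step: pick the first tool named in the query."""
    lowered = query.lower()
    return next((tool for tool in tools if tool.name in lowered), None)


@dataclass
class Agent:
    name: str
    description: str
    choose_tool: ToolChooser = keyword_chooser
    tools: List[Tool] = field(default_factory=list)

    def run(self, query: str) -> str:
        tool = self.choose_tool(query, self.tools)
        if tool is None:
            return f"[{self.name}] no tool needed"
        return f"[{self.name}] via {tool.name} -> {tool.func(query)}"

    def as_tool(self) -> Tool:
        """Expose this agent through the same interface as any other tool."""
        return Tool(self.name, self.description, self.run)


word_count = Tool("word_count", "Counts the words in the text it receives",
                  lambda text: str(len(text.split())))
text_agent = Agent("text_agent", "Answers questions about text", tools=[word_count])

# The specialist agent becomes just another tool for the orchestrator.
orchestrator = Agent("orchestrator", "Routes requests to specialists",
                     tools=[text_agent.as_tool()])

print(orchestrator.run("ask text_agent for a word_count of: hello agentic world"))
# prints: [orchestrator] via text_agent -> [text_agent] via word_count -> 9
```

Because `as_tool` only wraps `run`, the orchestrator cannot tell whether it is calling a plain function or a whole agent with its own tools, which is what makes nesting possible.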

Conversation sessions

Memory is an architectural component your GenAI app can use to preserve context across requests. For example, you can store the last N messages between a user and an agent so that the agent can draw on that information to provide better answers (and avoid repeating itself).

Langflow supports a chat memory component via LangChain that can store the past 100 messages. Adding this is as simple as dragging the component onto the canvas, wiring up a vector database, and connecting it to your agent.
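The "last 100 messages" behavior is a sliding window, which Python's `collections.deque` provides directly through `maxlen`. This is a hypothetical, purely in-memory sketch (`ChatMemory` and `Message` are invented names); it does not reproduce the Langflow component or its vector-database backing, only the windowing idea.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Optional


@dataclass(frozen=True)
class Message:
    role: str  # e.g., "user" or "assistant"
    text: str


class ChatMemory:
    """Keeps only the most recent `max_messages`; older ones are dropped automatically."""

    def __init__(self, max_messages: int = 100):
        if max_messages < 1:
            raise ValueError("max_messages must be at least 1")
        self._messages: Deque[Message] = deque(maxlen=max_messages)

    def add(self, role: str, text: str) -> None:
        self._messages.append(Message(role, text))

    def recent(self, n: Optional[int] = None) -> List[Message]:
        """Return the last `n` messages (all stored messages if `n` is None), oldest first."""
        messages = list(self._messages)
        if n is None:
            return messages
        return messages[-n:] if n > 0 else []

    def __len__(self) -> int:
        return len(self._messages)


memory = ChatMemory(max_messages=100)
for i in range(150):
    memory.add("user" if i % 2 == 0 else "assistant", f"message {i}")

print(len(memory))               # prints: 100
print(memory.recent(1)[0].text)  # prints: message 149
```

Before each LLM call, an agent would typically prepend `memory.recent(k)` to the prompt, trading context size against token cost.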

Editable messages

One challenge with debugging GenAI apps is that LLMs are black boxes. We often have zero visibility into how they reached a given decision. That's frustrating for developers, as it means they can't simply debug the source code to figure out why an LLM hallucinated an answer to a question about your product line.

Tools for testing GenAI apps can at least help close this gap by enabling rapid testing of changes so engineers can get closer to a proper answer more quickly. In Langflow, with editable messages, you can go back and change the inputs used in previous steps while testing your agentic workflow.
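Langflow's editable messages are a Playground feature, but the underlying idea, editing an intermediate input and re-running only the downstream steps, can be illustrated generically. In this hypothetical sketch, `run_flow` and `rerun_with_edit` are invented names and simple string functions stand in for LLM-backed steps.

```python
from typing import Callable, List

Step = Callable[[str], str]


def run_flow(steps: List[Step], user_input: str) -> List[str]:
    """Run steps in order and return the trace: trace[i] is the input to step i,
    and trace[-1] is the final output."""
    trace = [user_input]
    for step in steps:
        trace.append(step(trace[-1]))
    return trace


def rerun_with_edit(steps: List[Step], trace: List[str], index: int, edited: str) -> List[str]:
    """Replace the input to step `index` and re-run only that step and the ones after it."""
    if not 0 <= index < len(steps):
        raise IndexError(f"index must be in [0, {len(steps) - 1}]")
    return trace[:index] + run_flow(steps[index:], edited)


# Toy steps standing in for LLM-backed components.
steps: List[Step] = [str.strip, str.upper, lambda s: s + "!"]

trace = run_flow(steps, "  hi there ")
print(trace)   # prints: ['  hi there ', 'hi there', 'HI THERE', 'HI THERE!']

edited = rerun_with_edit(steps, trace, 1, "hello agents")
print(edited)  # prints: ['  hi there ', 'hello agents', 'HELLO AGENTS', 'HELLO AGENTS!']
```

Skipping the unchanged upstream steps is what makes this fast when those steps are expensive model or retrieval calls.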

Building an agentic workflow with DataStax Langflow

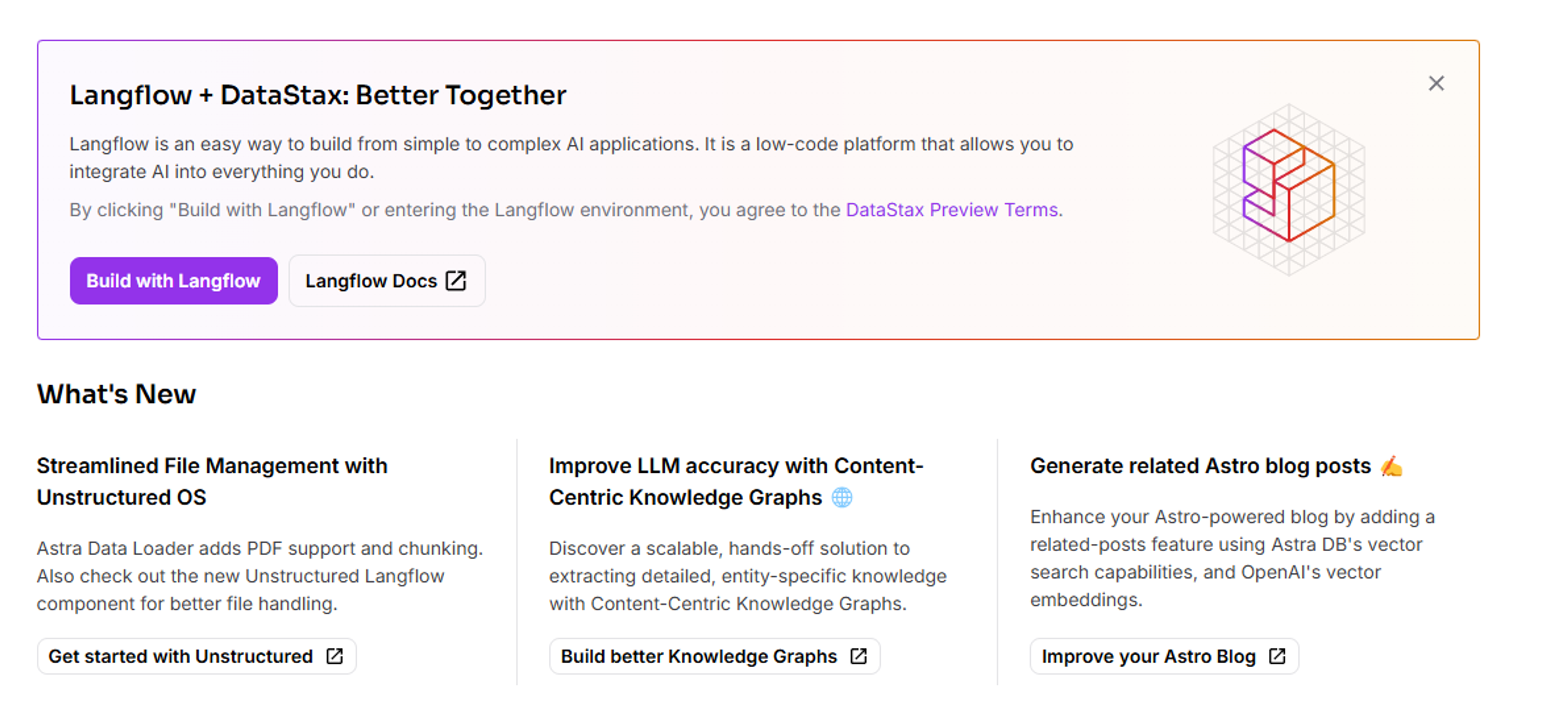

To build a Langflow app today, you can create a free account on DataStax. DataStax provides a complete GenAI application development platform that includes Langflow and Astra DB, an ultra-fast, scalable serverless vector store for RAG data.

After creating an account, you can select the Build with Langflow button to make your first Langflow application.

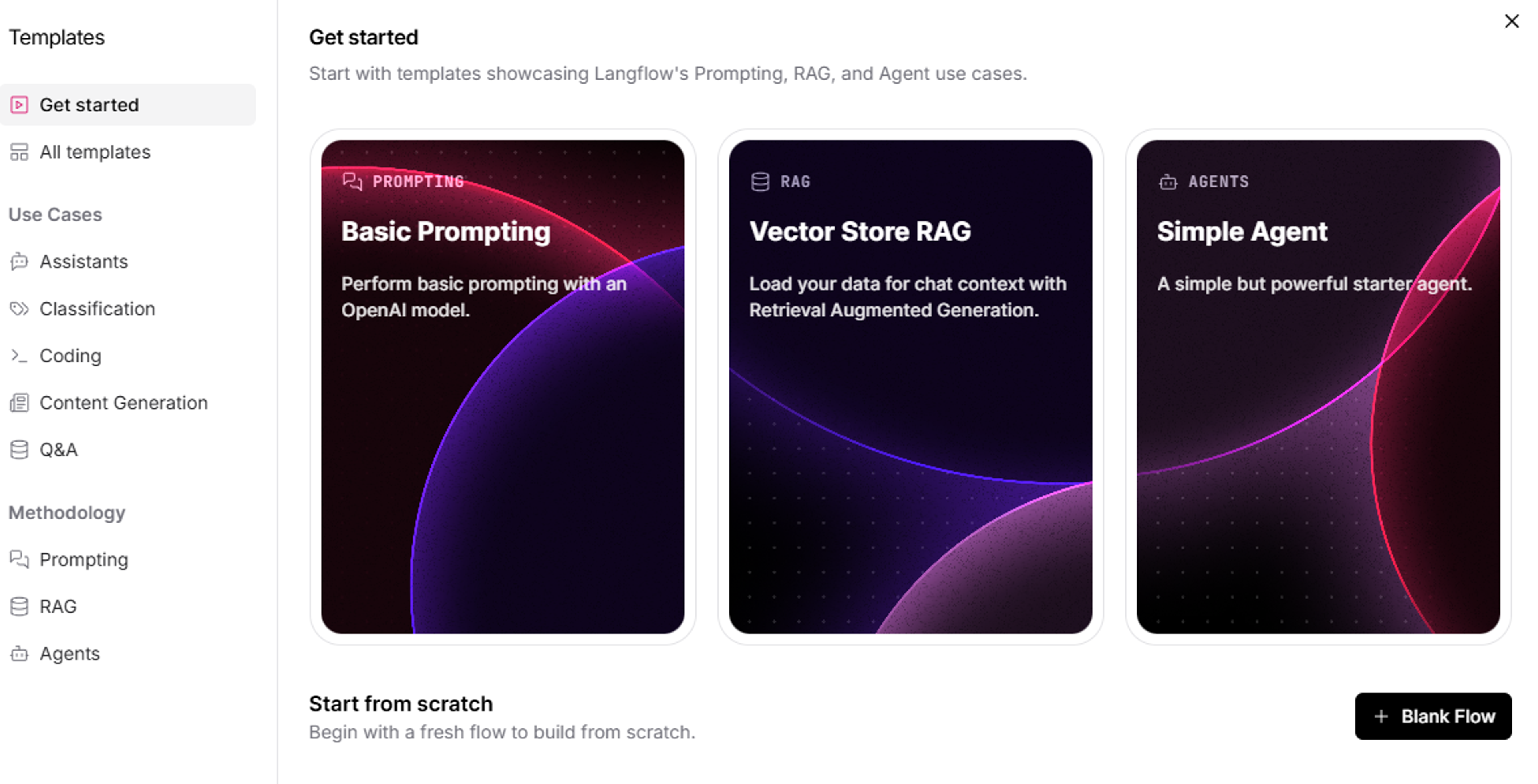

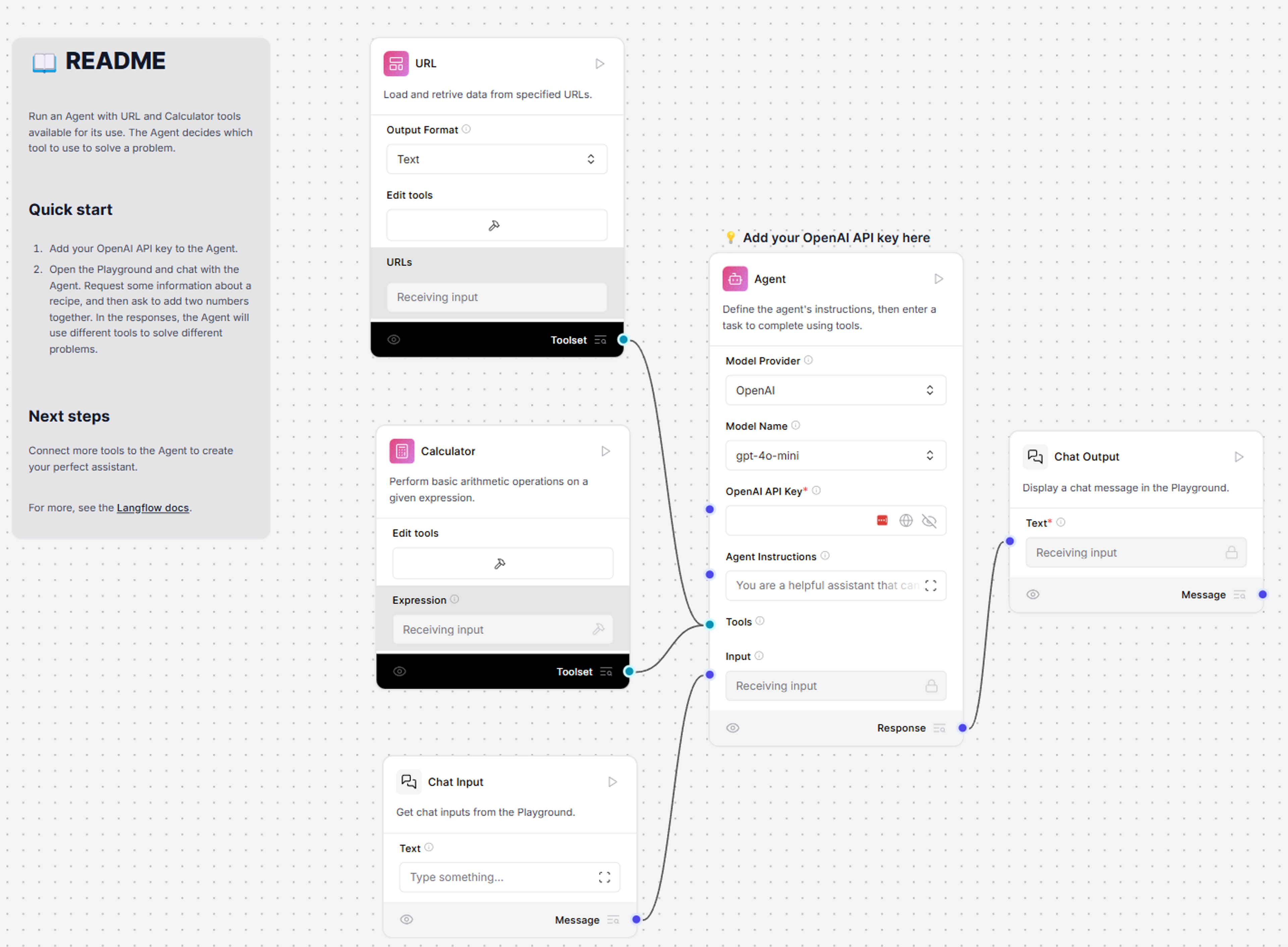

Once loaded, you can select the New Flow button to create a new Langflow application. For this article, start by selecting the Simple Agent template, which will build a full, working agent for you.

You'll see the Langflow editing screen with several components already laid out and configured.

By default, a Langflow agent will have a minimum of three components:

- An input accepting data. Usually, this is a chat input - a question from an agent's user.

- An output displaying the result.

- An agent that performs some work.

In this case, the agent also uses two other toolsets: a URL toolset and a Calculator toolset. Both are configured with tool mode enabled, which means the agent can call them dynamically as secondary agents that help process a specific request. The agent determines which task your request involves (fetching a URL or performing a mathematical operation) and calls the appropriate tool.

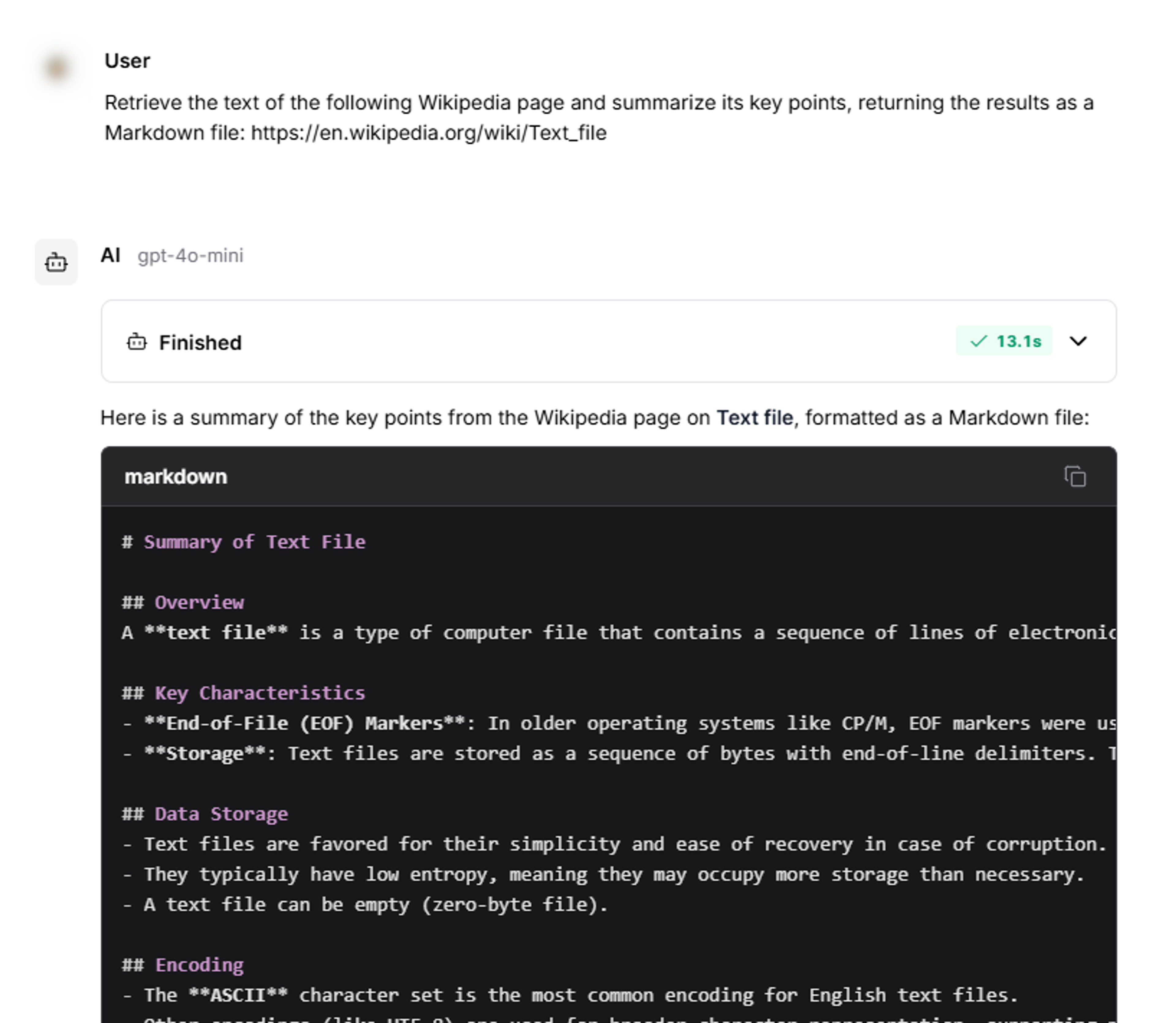
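At its core, a URL tool fetches a page and hands readable text back to the model. The following sketch uses only the Python standard library; `fetch_url_text` and `html_to_text` are hypothetical names, and this is not how Langflow's URL component is implemented. It simply drops `<script>` and `<style>` content and joins the remaining text fragments.

```python
from html.parser import HTMLParser
from typing import List
from urllib.request import Request, urlopen


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style> tags."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def html_to_text(html: str) -> str:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def fetch_url_text(url: str, timeout: float = 10.0) -> str:
    """Download a page and return its visible text for the agent to summarize."""
    request = Request(url, headers={"User-Agent": "url-tool-sketch/0.1"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        html = response.read().decode(charset, errors="replace")
    return html_to_text(html)


print(html_to_text("<p>Hello <b>agentic</b> world</p><script>var x = 1;</script>"))
# prints: Hello agentic world

# Example (requires network access):
# print(fetch_url_text("https://example.com")[:200])
```

Returning plain text rather than raw HTML keeps the tool's output short, which matters because everything the tool returns ends up in the model's context window.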

To try the Simple Agent template, obtain an OpenAI API key and add it to the agent's OpenAI API Key field. Then test it by opening the Playground (run icon in the upper right corner) and asking it questions. For example, you can ask it to summarize a URL and return the summary in Markdown format:

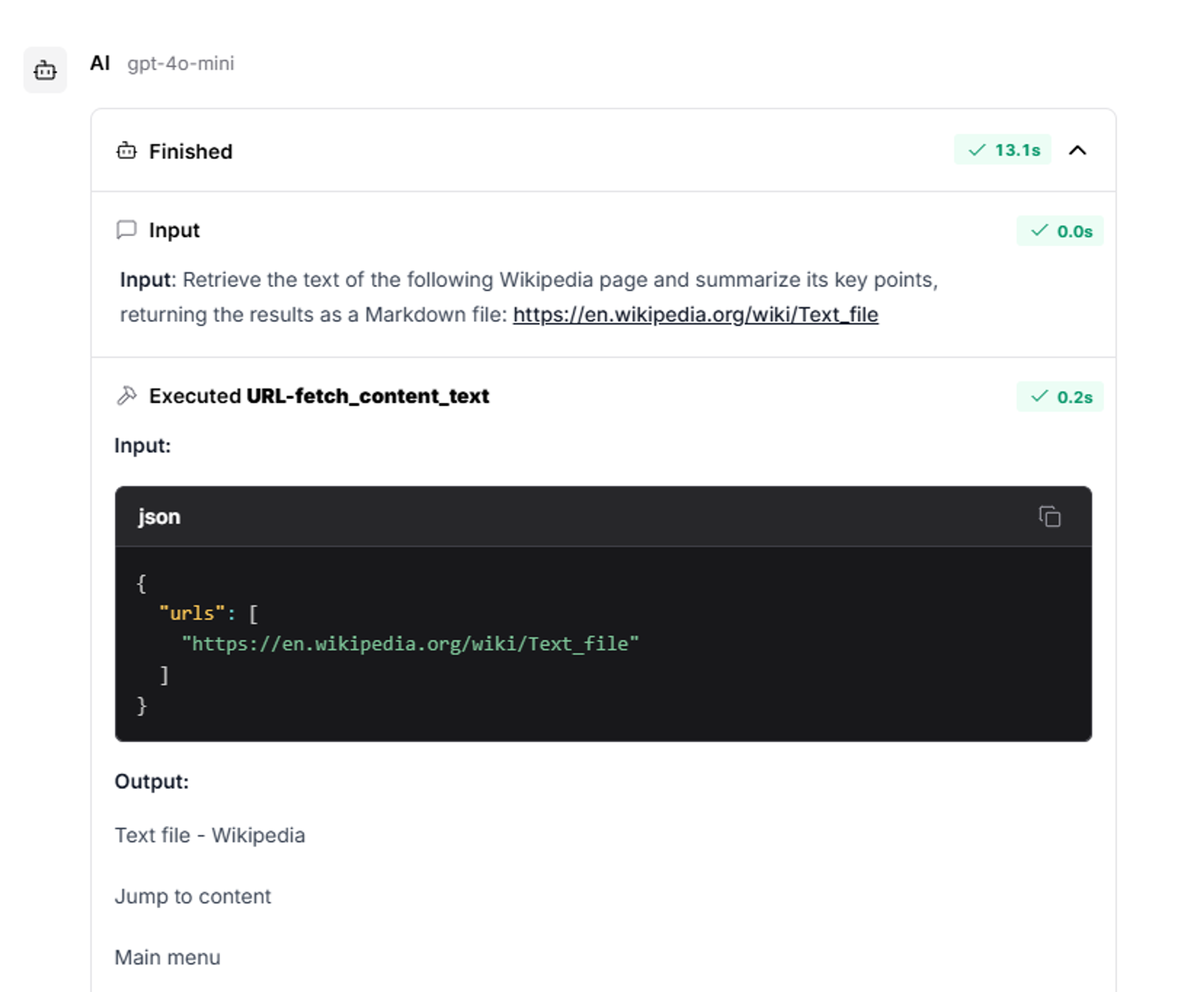

Note how the agent analyzed the request and understood that it had to use the URL tool to fetch the remote document. If you expand the arrow next to Finished, the Playground shows you the steps it took to respond to your request:

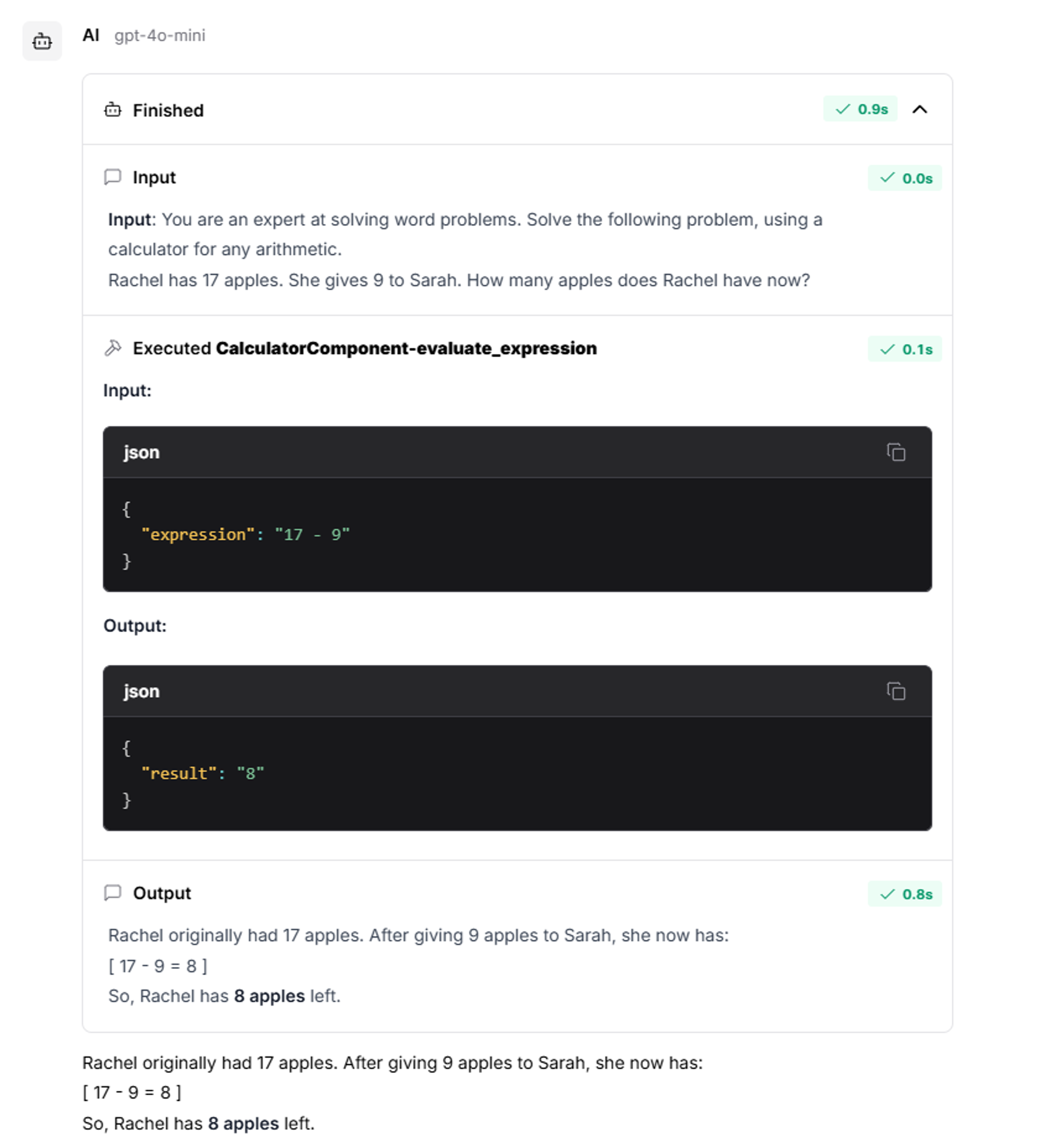

Conversely, if we give the agent a word problem, it can tell from the prompt that it needs to invoke a calculator for any math operations.
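Once the agent has turned a word problem into an arithmetic expression, the calculator tool only needs to evaluate it safely. Here is a hedged sketch of such a tool. It is not Langflow's Calculator component; `calculator_tool` is a hypothetical name, and it walks the expression with Python's `ast` module, allowing only numeric literals and arithmetic operators, instead of calling `eval()` on model-generated text.

```python
import ast
import operator


def _safe_pow(base, exponent):
    # Guard against expressions like 9 ** 9 ** 9 that would take enormous time and memory.
    if abs(exponent) > 100:
        raise ValueError("exponent too large for this calculator sketch")
    return operator.pow(base, exponent)


_BIN_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
    ast.Div: operator.truediv, ast.FloorDiv: operator.floordiv,
    ast.Mod: operator.mod, ast.Pow: _safe_pow,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def _eval(node):
    if isinstance(node, ast.Expression):
        return _eval(node.body)
    if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
        return _BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
        return _UNARY_OPS[type(node.op)](_eval(node.operand))
    # Anything else (names, calls, attributes, ...) is rejected outright.
    raise ValueError(f"unsupported expression element: {type(node).__name__}")


def calculator_tool(expression: str) -> str:
    """Evaluate a plain arithmetic expression and return the result as text for the agent."""
    return str(_eval(ast.parse(expression, mode="eval")))


# "A team buys 3 boxes of 12 pens and gives away 5. How many are left?"
# The agent would translate this into an expression before calling the tool:
print(calculator_tool("3 * 12 - 5"))  # prints: 31
```

Rejecting every node type not explicitly listed is the key design choice: model output is untrusted input, so the tool allows a small known-safe subset rather than trying to block dangerous constructs one by one.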

Conclusion

Using agentic workflows, you can create complex, self-correcting workflows that react dynamically to changing conditions. Tools like Langflow enable visually composing, testing, and deploying complex GenAI apps from pre-built components, cutting development time down from weeks to days.