LangChain is designed to simplify AI development. For example, LangChain provides pre-built libraries for popular LLMs (like OpenAI GPT), so all you need to start developing with a model is your credentials and your prompts. You don’t need to worry about OpenAI’s API specifics, such as endpoints, protocols, and authentication.

But LLM interaction is just the beginning of what LangChain can help with.

Why is LangChain important?

AI applications usually follow certain steps to process data. For instance, when you click to view an item on an e-commerce website, that click event is sent to the website and processed by an AI application that decides which “suggested items” to show on the page. The application is given context: the item being viewed, what’s in your cart, and the items you have previously viewed and shown interest in. All that data is passed to an LLM, which decides on other items you might be interested in.

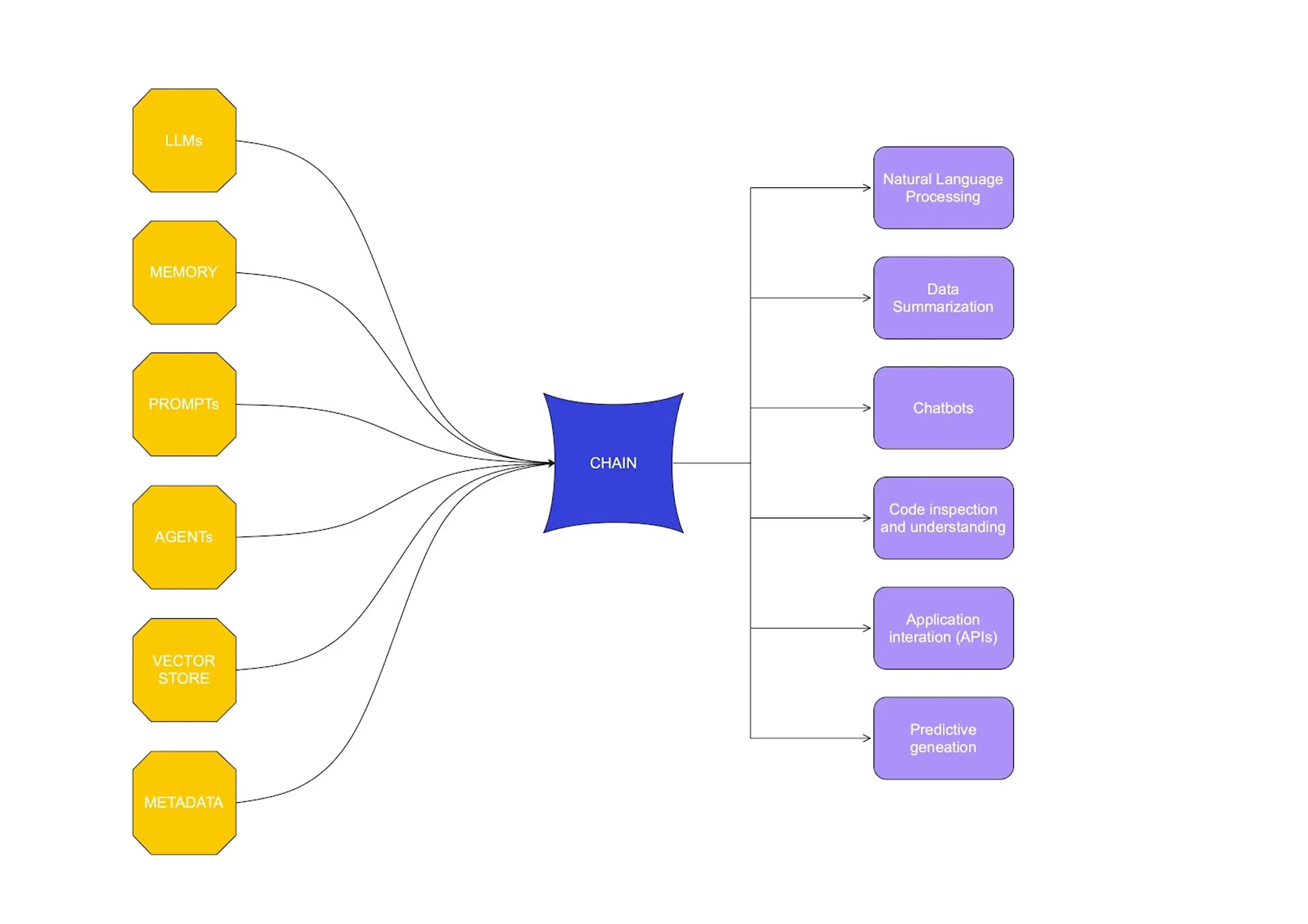

High-Level View of LangChain

When you’re building an application like this, the steps in the pipeline choreograph which services are included, how they are “fed” the data, and what “shape” the data takes. As you can imagine, these complex actions require APIs, data structures, network access, security, and much more.

LangChain offers a framework for integrating language models with other tools and data sources. This integration lets developers assemble more complex and sophisticated AI applications from reusable parts.

By leveraging LangChain, developers can create AI systems capable of understanding, reasoning, and interacting in ways that closely mimic human communication. This capability is crucial for applications requiring a deep comprehension of language and context.

LangChain can enhance the efficiency, accuracy, and contextual relevance of AI interactions in customer service, content creation, data analysis, and more. Its ability to bridge the communication gap between humans and machines opens up new possibilities for integrating AI into everyday tasks and processes. LangChain makes AI more accessible, intuitive, and useful in practical applications.

What are the key components of LangChain?

LangChain is made up of several key components that work together on natural language processing tasks.

LLMs (large language models)

LLMs are the backbone of LangChain, providing the core capability for understanding and generating language. They are trained on vast datasets to produce coherent and contextually relevant text.

Prompt templates

Prompt templates structure the input sent to an LLM. A template combines fixed instructions with placeholders for user input, so the same carefully worded prompt can be reused across queries to get consistent, useful responses.

Indexes

Indexes organize and store information in a structured way so that relevant data can be retrieved efficiently when the system processes language queries.

Retrievers

Retrievers work alongside indexes. Their function is to fetch the information relevant to the user’s query from the indexes, so that response generation is grounded in that data.

Output parsers

Output parsers process the text generated by LLMs, converting it into a format that is useful for the specific task, such as JSON or an instance of an application class.

Vector store

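A vector store holds embeddings: numerical vectors that represent words, phrases, or documents. The embeddings themselves are produced by an embedding model; the vector store saves them and searches them by similarity. This is crucial for tasks involving semantic analysis and understanding the nuances of language.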

Agents

Agents in LangChain are the decision-making components. They use an LLM to choose the next action, such as calling a tool or querying a data source, based on the input, the context, and the resources available within the system.

What are the main features of LangChain?

LangChain has three main features that support its streamlined AI development workflow.

Communicating with models

When building AI applications, you’ll need a way to communicate with a model. LangChain has focused explicitly on common model interactions and made it easy to build a complete solution. Learn more about models and which LLMs LangChain supports.

LangChain has a prompts library to help parameterize repeated prompt text. Prompts can have placeholders that the system fills in right before submitting the prompt to the LLM. Instead of doing string replacement on the prompt yourself, you provide LangChain with a map of each placeholder and its value, and it handles preparing the final prompt for completion.
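To make the placeholder idea concrete, here is a minimal plain-Python sketch. It is not LangChain’s API: it uses the standard library’s `str.format_map`, and the template text and placeholder names are hypothetical. It does use the same curly-brace placeholder style that LangChain’s PromptTemplate uses by default, and it fails loudly if a placeholder has no value.

```python
# Prompt-templating sketch in plain Python (not LangChain's API).
# The template text and placeholder names are hypothetical examples.
import string

PROMPT = (
    "You are a shopping assistant.\n"
    "The customer is viewing: {item}\n"
    "Their cart contains: {cart}\n"
    "Suggest three related products."
)


def placeholders(template: str) -> set[str]:
    """Return the names of all {placeholder} fields in a template."""
    return {name for _, name, _, _ in string.Formatter().parse(template) if name}


def fill(template: str, values: dict[str, str]) -> str:
    """Fill every placeholder from a map, failing loudly if one is missing."""
    missing = placeholders(template) - set(values)
    if missing:
        raise KeyError(f"missing values for: {sorted(missing)}")
    return template.format_map(values)


prompt = fill(PROMPT, {"item": "trail running shoes", "cart": "water bottle, socks"})
print(prompt)
```

Checking for missing values up front catches a mistyped placeholder before a malformed prompt ever reaches the model.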

Once the model has done its job and responded with a completion, the application needs to post-process it. Sometimes this is simple character cleanup, but often the completion must be loaded into the fields of a class object. LangChain's output parsers make quick work of this by tying the format the LLM responds in to the application’s custom classes.
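Here is a minimal sketch of that post-processing step in plain Python, not LangChain’s output-parser API. It assumes the model was asked to reply with a JSON array; the `Suggestion` class and the sample completion are hypothetical.

```python
# Output-parsing sketch: turn an LLM's JSON completion into typed objects.
import json
from dataclasses import dataclass

FENCE = "`" * 3  # a Markdown code fence, which models sometimes wrap JSON in


@dataclass
class Suggestion:
    name: str
    reason: str


def parse_suggestions(completion: str) -> list[Suggestion]:
    """Clean up a completion and load it into Suggestion objects."""
    text = completion.strip()
    if text.startswith(FENCE):
        # Character cleanup: drop the opening fence line and the closing fence.
        text = text.split("\n", 1)[1].rsplit(FENCE, 1)[0]
    items = json.loads(text)
    return [Suggestion(name=item["name"], reason=item["reason"]) for item in items]


raw = FENCE + 'json\n[{"name": "Hydration vest", "reason": "Pairs with trail shoes"}]\n' + FENCE
print(parse_suggestions(raw))
```

Returning dataclass instances rather than raw dictionaries means the rest of the application can rely on named, typed fields.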

Data retrieval

Language models are called “large” mainly because of their parameter count and the amount of data used to train them. For example, LLMs like GPT have been trained on vast amounts of text from the public internet. Definitely a “large” amount. However, no matter how much data a model is trained with, that data is fixed at training time, so the model knows nothing about information published afterward.

Retrieval-augmented generation (RAG) is an application design that can incorporate new data as it appears. In RAG, the application first retrieves recent data related to the input, typically by searching a vector store for similar content, to gain context. Then that context is provided to the LLM in a prompt so it can generate an up-to-date response.

RAG isn’t as simple as making a quick query and continuing to interact with the LLM. That is why LangChain offers libraries to aid in implementing RAG.

LangChain offers the “document” object to “normalize” data from multiple sources. Loading data into documents lets you bring together data from sources with different schemas. As documents, the data can easily be passed between different chains in a structured way.
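The sketch below illustrates the normalization idea in plain Python. It defines its own small `Document` dataclass with the two fields LangChain’s Document class carries, `page_content` and `metadata`, so it runs without LangChain installed; the product and support-ticket records are hypothetical.

```python
# Normalization sketch: map records with different schemas onto one Document shape.
# This Document is a local stand-in, not LangChain's class; the records are hypothetical.
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Document:
    page_content: str
    metadata: dict[str, Any] = field(default_factory=dict)


# Two sources with different schemas.
product_rows = [{"sku": "A-100", "title": "Trail shoes", "desc": "Lightweight trail runner"}]
support_tickets = [{"id": 42, "body": "My shoes arrived in the wrong size.", "opened": "2024-05-01"}]


def from_product(row: dict[str, Any]) -> Document:
    # The searchable text goes in page_content; identifying fields go in metadata.
    return Document(
        page_content=f"{row['title']}: {row['desc']}",
        metadata={"source": "catalog", "sku": row["sku"]},
    )


def from_ticket(ticket: dict[str, Any]) -> Document:
    return Document(
        page_content=ticket["body"],
        metadata={"source": "support", "ticket_id": ticket["id"], "opened": ticket["opened"]},
    )


docs = [from_product(r) for r in product_rows] + [from_ticket(t) for t in support_tickets]
for doc in docs:
    print(doc.metadata["source"], "|", doc.page_content)
```

Once both sources share one shape, downstream steps such as embedding or retrieval only need to understand `page_content` and `metadata`.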

In a RAG application, the data an LLM draws on usually isn’t stored in typical relational tables; it is stored as vectors in a vector store. Many modern databases, like Astra DB, offer a vector option. There are also vector-specific databases purpose-built for storing vector embeddings.

LangChain offers integrations for many popular vector stores, including Astra DB. It also offers convenient features specific to vector search, like similarity search (by query text) and similarity search by vector.
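As a rough illustration of what a vector store does, here is a tiny in-memory version. The method names echo LangChain’s vector store methods (`add_texts`, `similarity_search`, `similarity_search_by_vector`), but the `ToyVectorStore` class is a standalone assumption, and the bag-of-words `embed` function is a toy stand-in for a real embedding model.

```python
# Toy in-memory vector store illustrating similarity search.
# embed() is a bag-of-words stand-in for a real embedding model.
import math

VOCAB = ["shoes", "trail", "running", "socks", "water", "bottle", "jacket", "rain"]


def embed(text: str) -> list[float]:
    """Toy embedding: how often each vocabulary word appears in the text."""
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCAB]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    def __init__(self) -> None:
        self.entries: list[tuple[str, list[float]]] = []

    def add_texts(self, texts: list[str]) -> None:
        # Store each text alongside its embedding.
        for text in texts:
            self.entries.append((text, embed(text)))

    def similarity_search_by_vector(self, vector: list[float], k: int = 2) -> list[str]:
        # Rank every stored entry by cosine similarity to the given vector.
        ranked = sorted(self.entries, key=lambda entry: cosine(vector, entry[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

    def similarity_search(self, query: str, k: int = 2) -> list[str]:
        # Embed the query text, then search by that vector.
        return self.similarity_search_by_vector(embed(query), k)


store = ToyVectorStore()
store.add_texts(["trail running shoes", "wool running socks", "rain jacket", "water bottle"])
print(store.similarity_search("shoes for trail running", k=1))  # ['trail running shoes']
```

Production vector stores use approximate nearest-neighbor indexes instead of sorting every entry, but the contract is similar: a query goes in, and the most similar stored texts come out.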

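With LangChain, you can tune your vector searches with maximal marginal relevance (MMR) search, which balances how relevant each result is to the query against how redundant it is with results already chosen. This comes in handy when you want to get the context just right: enough relevant data for the LLM, without near-duplicate passages crowding out other useful information.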
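MMR picks results greedily: each round it chooses the candidate with the best score λ·sim(candidate, query) − (1 − λ)·max sim(candidate, already chosen). The plain-Python sketch below applies that rule to hand-made toy vectors. The `lambda_mult` name mirrors LangChain’s parameter for this trade-off, but the code is an illustration, not LangChain’s implementation.

```python
# Greedy maximal marginal relevance (MMR) over toy vectors.
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mmr_score(i: int, query: list[float], candidates: list[list[float]],
              selected: list[int], lambda_mult: float) -> float:
    relevance = cosine(query, candidates[i])
    # Redundancy: similarity to the closest result already chosen (0 if none yet).
    redundancy = max((cosine(candidates[i], candidates[j]) for j in selected), default=0.0)
    return lambda_mult * relevance - (1 - lambda_mult) * redundancy


def mmr(query: list[float], candidates: list[list[float]],
        k: int = 2, lambda_mult: float = 0.5) -> list[int]:
    """Return the indices of k candidates, chosen one at a time by MMR score."""
    selected: list[int] = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:
        best = max(remaining, key=lambda i: mmr_score(i, query, candidates, selected, lambda_mult))
        selected.append(best)
        remaining.remove(best)
    return selected


query = [1.0, 0.0, 0.0]
candidates = [
    [0.9, 0.1, 0.0],    # 0: highly relevant
    [0.88, 0.12, 0.0],  # 1: near-duplicate of 0
    [0.7, 0.0, 0.7],    # 2: less relevant but different
]
print(mmr(query, candidates, k=2))                   # [0, 2]
print(mmr(query, candidates, k=2, lambda_mult=1.0))  # [0, 1]
```

With `lambda_mult=1.0`, MMR reduces to plain similarity ranking and returns both near-duplicates; at 0.5, it swaps the second near-duplicate for the more distinct candidate.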

Chat memory

When an AI application chats with an LLM, it needs to give the LLM some historical information (context) about what is being asked. Without context, the conversation will be dry and unproductive. In the previous data retrieval section, the RAG pattern was described as a way to add this context.

Sometimes, though, the context needs to be in the moment. When you are chatting with a friend, chances are you aren’t taking notes, yet you still remember what was just said. The same should go for a chat with an LLM: the conversation so far needs to be available to the model. Because each request to an LLM is independent, the application has to track what has been said and send it along.

LangChain offers a memory library where the application can save chat history to a fast-lookup store. This store is usually a NoSQL or in-memory store. Retrieving a chat’s history quickly and injecting it into the LLM’s prompt is a powerful way of creating intelligent contextual applications.
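Here is a minimal sketch of that pattern, assuming a plain Python dictionary as the fast-lookup store. The `ChatMemory` class and `build_prompt` helper are hypothetical names, not LangChain’s memory API; a production app would typically back this with an external NoSQL or in-memory database instead.

```python
# Chat memory sketch: per-session history in a dict, injected into each prompt.
from collections import defaultdict


class ChatMemory:
    def __init__(self, max_messages: int = 10) -> None:
        self.max_messages = max_messages
        self.sessions: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add(self, session_id: str, role: str, text: str) -> None:
        self.sessions[session_id].append((role, text))

    def history(self, session_id: str) -> list[tuple[str, str]]:
        # Only the most recent messages are returned, keeping prompts short enough
        # for the model's context window.
        return self.sessions[session_id][-self.max_messages:]


def build_prompt(memory: ChatMemory, session_id: str, question: str) -> str:
    # Replay the stored conversation, then append the new question.
    lines = [f"{role}: {text}" for role, text in memory.history(session_id)]
    lines.append(f"user: {question}")
    return "\n".join(lines)


memory = ChatMemory(max_messages=4)
memory.add("s1", "user", "I'm looking for trail shoes.")
memory.add("s1", "assistant", "Do you prefer cushioned or minimal shoes?")
prompt = build_prompt(memory, "s1", "Cushioned, please. What sizes do they come in?")
print(prompt)
```

After the model replies, the application would call `memory.add` for both the new question and the reply, so the next prompt includes them.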

How does LangChain work?

The “chain” in LangChain refers to combining AI actions to create a processing pipeline. Each action is a necessary step in the pipeline, and linked together, the steps form a chain that completes the overall goal.

For example, a RAG system starts with the user submitting a question. An embedding is created from that text; a lookup is done on a vector database to gain more context about the question. Then, a prompt is created using both the original question and the context from the retrieval. The prompt is submitted to the LLM, which responds with an intelligent completion of the prompt.
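The same steps can be traced in a small, self-contained Python sketch. Everything in it is a stand-in: `embed` is a toy bag-of-words embedding, `KNOWLEDGE` plays the role of the vector database, and `fake_llm` is a hypothetical placeholder for a real model call that simply echoes the retrieved context.

```python
# Toy RAG pipeline: embed the question, retrieve context, build a prompt, call a model.
# embed, KNOWLEDGE, and fake_llm are hypothetical stand-ins for real components.
import math

VOCAB = ["return", "returns", "refund", "days", "shipping", "free", "orders"]

KNOWLEDGE_TEXTS = [
    "Returns are accepted within 30 days for a full refund.",
    "Shipping is free on orders over $50.",
]


def embed(text: str) -> list[float]:
    """Toy embedding: counts of vocabulary words in the text."""
    words = [w.strip(".,?$").lower() for w in text.split()]
    return [float(words.count(term)) for term in VOCAB]


# Stand-in for the vector database: (text, embedding) pairs.
KNOWLEDGE = [(text, embed(text)) for text in KNOWLEDGE_TEXTS]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 1) -> list[str]:
    # Embed the question and look up the most similar stored texts.
    query = embed(question)
    ranked = sorted(KNOWLEDGE, key=lambda item: cosine(query, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context: list[str]) -> str:
    # Combine the original question with the retrieved context.
    return "Context:\n" + "\n".join(context) + "\n\nQuestion: " + question


def fake_llm(prompt: str) -> str:
    # Hypothetical: a real app would send the prompt to an LLM here.
    context = prompt.split("Context:\n", 1)[1].split("\n\nQuestion:", 1)[0]
    return "Based on our policy: " + context


def answer(question: str) -> str:
    return fake_llm(build_prompt(question, retrieve(question)))


print(answer("How many days do I have to return shoes for a refund?"))
```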

All the steps in the RAG pattern need to happen in succession, and if any step fails, processing should stop. LangChain offers the “chain” construct to attach steps in a specific order with a specific configuration. LangChain’s components follow this chain construct, which makes it easy to rearrange steps while still building powerful processing pipelines.
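The chaining idea can be sketched in a few lines of Python. This toy `Chain` class borrows the `|` composition and `invoke` call style of LangChain’s expression language, but it is a standalone illustration, not LangChain’s implementation; the steps, including `fake_llm`, are hypothetical. Because each step simply passes its result to the next, an exception in any step stops the pipeline before later steps run.

```python
# Chain sketch: steps run in order via |, and any exception stops the pipeline.
from typing import Any, Callable


class Chain:
    def __init__(self, *steps: Callable[[Any], Any]) -> None:
        self.steps = list(steps)

    def __or__(self, other: "Chain") -> "Chain":
        # Composing two chains gives a new chain with all steps in order.
        return Chain(*self.steps, *other.steps)

    def invoke(self, value: Any) -> Any:
        for step in self.steps:
            value = step(value)  # an exception here skips every remaining step
        return value


def validate(question: str) -> str:
    if not question.strip():
        raise ValueError("empty question")
    return question.strip()


def to_prompt(question: str) -> str:
    return f"Answer briefly: {question}"


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call.
    return f"[model reply to: {prompt}]"


def parse(reply: str) -> str:
    return reply.strip("[]")


pipeline = Chain(validate) | Chain(to_prompt) | Chain(fake_llm) | Chain(parse)
print(pipeline.invoke("  What is RAG?  "))  # model reply to: Answer briefly: What is RAG?
```

Swapping a step, for example replacing `fake_llm` with a real model call, only changes one link; the rest of the pipeline is untouched.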

What are the benefits of using LangChain?

Using LangChain offers several benefits for natural language processing.

Enhanced language understanding and generation

LangChain integrates various language models, vector databases, and tools for more sophisticated language processing. This leads to better understanding and generation of human-like language, especially in complex tasks.

Customization and flexibility

LangChain’s modular structure offers significant customization. This adaptability makes it suitable for many applications, including content creation, customer service, and data analytics.

Streamlined development process

LangChain's framework simplifies the development of language processing systems. Chaining different components reduces complexity and accelerates the creation of advanced applications.

Improved efficiency and accuracy

LangChain can improve both efficiency and accuracy in language tasks. Reusing and combining ready-made components speeds up development, and grounding prompts in retrieved context helps the LLM produce more accurate outcomes.

Examples of LangChain applications

LangChain has been purpose-built to facilitate several different types of GenAI applications.

Retrieval-augmented generation (RAG)

LangChain offers template scenarios for implementing RAG.

- A simple question/answer example that adds context to a prompt.

- A RAG-over-code example that helps developers while they write code.

- A chat history example that adds earlier turns of the conversation to each prompt for a more contextual chat experience.

Visit LangChain’s tutorials for more.

Chatbots

Chatbots are a fundamental application of LLMs, showcasing the practical implementation of AI-driven conversations. LangChain’s getting started example helps you create an AI application in just a few minutes.

Synthetic data generation

LangChain's synthetic data generation example uses an LLM to generate data artificially based on a prompt. You can then use such data to run unit tests, load tests, and more.

Interacting with a database using natural language

LangChain's SQL example uses an LLM to transform a natural language question into an SQL query. Tools like this make it easier to write SQL queries that capture all the specifics of the question at hand.
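A minimal sketch of that flow, using Python’s built-in sqlite3 module, might look like this. `fake_llm_to_sql` is a hypothetical stand-in that returns a fixed query; a real application would send the table schema and the question to an LLM and get the SQL back. Because generated SQL is untrusted, the sketch runs only SELECT statements.

```python
# Natural-language-to-SQL sketch with sqlite3; the LLM step is a hypothetical stub.
import sqlite3

SCHEMA = "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)"


def fake_llm_to_sql(question: str, schema: str) -> str:
    # A real app would put the schema and question in a prompt and ask the LLM for SQL.
    return ("SELECT customer, SUM(total) AS spent FROM orders "
            "GROUP BY customer ORDER BY spent DESC LIMIT 1")


def answer(conn: sqlite3.Connection, question: str) -> list[tuple]:
    sql = fake_llm_to_sql(question, SCHEMA)
    # Generated SQL is untrusted: allow only SELECT statements (a basic guard only).
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError(f"refusing to run non-SELECT SQL: {sql}")
    return conn.execute(sql).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.executemany(
    "INSERT INTO orders (customer, total) VALUES (?, ?)",
    [("Ana", 40.0), ("Ben", 25.5), ("Ana", 12.5)],
)
print(answer(conn, "Which customer has spent the most?"))  # [('Ana', 52.5)]
```

A prefix check like this is only a first line of defense; running generated queries under a read-only database user is a stronger safeguard.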

Getting started with LangChain

To build a Python app using LangChain, first add the LangChain library (the langchain package) to your project’s requirements.

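To interact with an LLM, you also need to install its supporting integration library (for OpenAI models, for example, langchain-openai). View the full list of supported LLMs in the LangChain documentation.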

Once those two steps are done, you’re ready to start building.

You could build a PromptTemplate + LLM + OutputParser chain, or you could create a simple prompt template application. Read more about interacting with models, data retrieval, chains, and memory in the LangChain documentation.

Discover how DataStax and LangChain work better together

DataStax provides the real-time vector data and RAG capabilities that GenAI apps need. Seamlessly integrate with stacks of your choice (RAG, LangChain, LlamaIndex, OpenAI, GCP, AWS, Azure, etc.).

To get started with LangChain and to see how DataStax and LangChain are better together, check out “Build LLM-Powered Applications Faster with LangChain Templates and Astra DB.”

Find more information on how to set up and deploy Astra DB, one of the world's highest-performing vector stores, built on Apache Cassandra and designed for handling massive volumes of data at scale.