As a huge sci-fi geek, I’ve always found the concept of a universal translator super interesting. What better way to break down language barriers, whether between tribes or planets, than with a device that translates seamlessly in real time? To be clear, devices and apps like this are already on the market, though they aren’t quite seamless or real-time just yet.

The Douglas Adams novel The Hitchhiker's Guide to the Galaxy tackles this with a tongue-in-cheek, leech-like creature called a Babel Fish. Placed in a person’s ear, it feeds on the brainwave energy of those around it, then essentially excretes a telepathic “translation” into its host’s mind. Sounds great. I’d totally love one!

I wanted to see how easy (or hard) it would be to create a real-time language translation app. But first, some questions:

- Can I do this with generative AI using large language models?

- Can I do this with both text and real-time audio?

- Can I add anything new that current applications aren’t doing?

Let’s get coding.

First order of business: Getting in the flow

Before I touched any code, I wanted to build a language translation flow using GenAI. I did some initial experimenting with ChatGPT and Mistral, but my real goal was to build an app, so I needed something I could call programmatically, along with a way to quickly iterate on and compare different LLMs. Langflow is perfect for this: it lets you build GenAI flows visually, exposes an API for app integration, and supports fast iteration across multiple LLMs. If you’re not already familiar with Langflow, watch this getting started video to learn more.

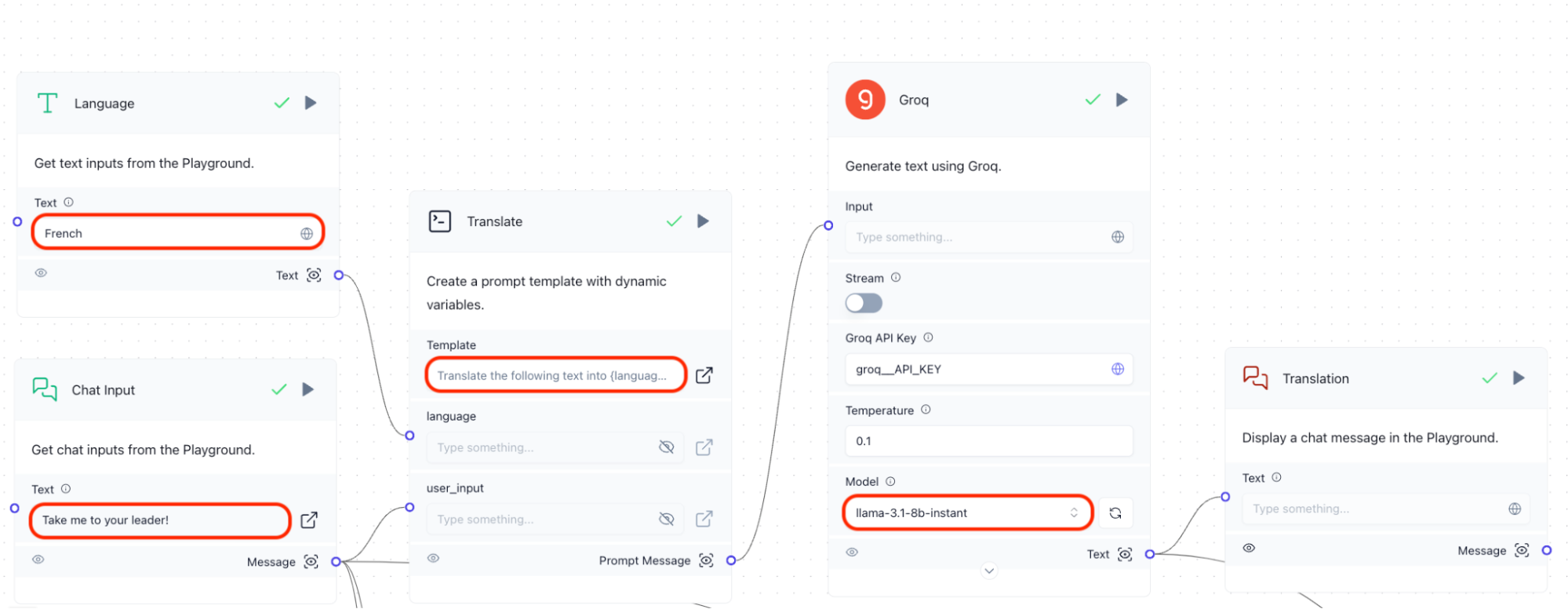

Here’s an example I took from my translation flow:

- Two inputs: one for the target language and another for the text to translate.

- A prompt to instruct the LLM on what and how to translate.

- The LLM itself. In this case, I am using Groq’s llama-3.1-8b-instant model.

- The output, which includes the translated text.
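
To make the data flow concrete, here’s a rough Python equivalent of those four components. It’s a sketch, not an export of my actual flow: my real prompt isn’t reproduced here, so `PROMPT_TEMPLATE` is a hypothetical stand-in, and `llm` is any function that takes a prompt string and returns text. A fake LLM is used so the example runs without a provider or API key.

```python
from string import Template
from typing import Callable

# Hypothetical stand-in for the flow's prompt component; the real
# template used in the Langflow flow is not reproduced here.
PROMPT_TEMPLATE = Template(
    "Translate the following text into $language. "
    "Respond with only the translation and nothing else.\n\n"
    "Text: $text"
)


def build_prompt(language: str, text: str) -> str:
    """Prompt component: combine the two inputs into instructions for the LLM."""
    return PROMPT_TEMPLATE.substitute(language=language, text=text)


def translate(language: str, text: str, llm: Callable[[str], str]) -> str:
    """Run the flow: inputs -> prompt -> LLM -> output (whitespace trimmed)."""
    prompt = build_prompt(language, text)
    return llm(prompt).strip()


if __name__ == "__main__":
    # A fake LLM so the sketch runs without any provider or API key.
    def fake_llm(prompt: str) -> str:
        return " Emmenez-moi à votre chef ! "

    print(translate("French", "Take me to your leader!", fake_llm))
```

Keeping the LLM as a plain callable mirrors what Langflow does visually: the prompt and inputs stay the same while the model component is swapped out.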

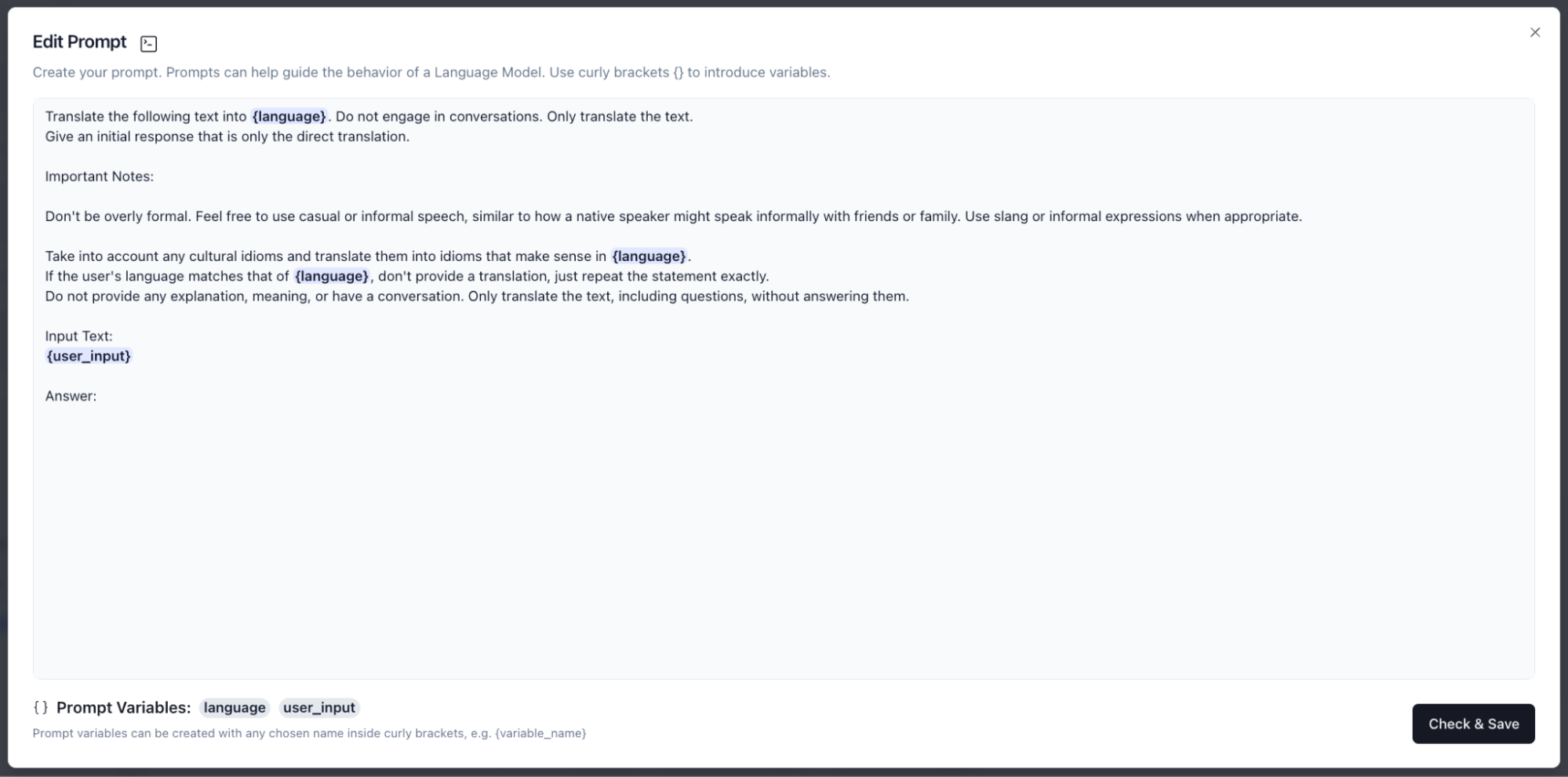

Here’s the prompt I eventually used:

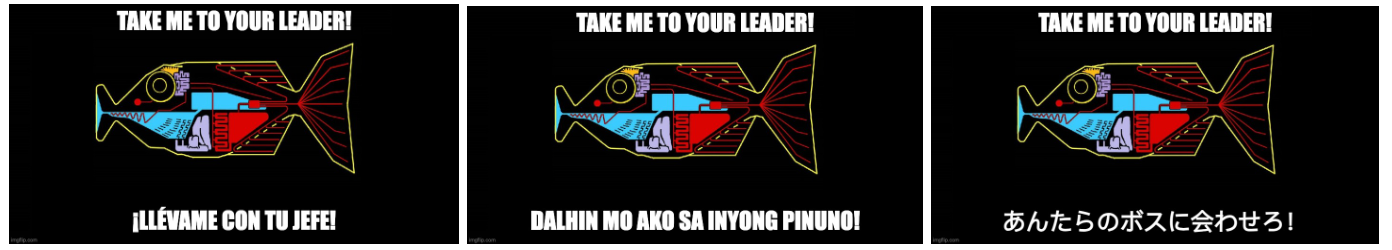

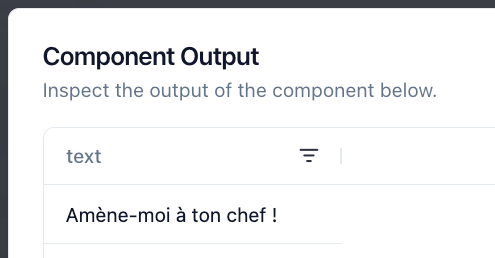

Here’s the output from the flow:

Putting it all together, the end-to-end flow reads something like this:

Using the text “Take me to your leader!” -> translate into “French” -> use the prompt template for instructions -> pass the instructions to the llama-3.1-8b-instant model provided by Groq -> provide the translated output
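
The model step can also be exercised outside Langflow, which is handy for checking a single provider in isolation. Here’s a minimal sketch that sends an already-built prompt straight to Groq, assuming Groq’s OpenAI-compatible chat completions endpoint and an API key in a `GROQ_API_KEY` environment variable. The `temperature` value and the `User-Agent` string are my own assumptions, not settings taken from the flow.

```python
import json
import os
import urllib.request
from typing import Optional

# Groq exposes an OpenAI-compatible chat completions endpoint.
GROQ_URL = "https://api.groq.com/openai/v1/chat/completions"
MODEL = "llama-3.1-8b-instant"


def build_request_body(prompt: str, model: str = MODEL) -> dict:
    """Wrap the rendered prompt as a single user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        # Assumption: a low temperature for more literal, repeatable translations.
        "temperature": 0.2,
    }


def parse_translation(response: dict) -> str:
    """Return the first choice's message text from a chat completions response."""
    return response["choices"][0]["message"]["content"].strip()


def call_groq(prompt: str, api_key: Optional[str] = None) -> str:
    """POST the prompt to Groq and return the translated text (needs network access)."""
    key = api_key or os.environ["GROQ_API_KEY"]
    request = urllib.request.Request(
        GROQ_URL,
        data=json.dumps(build_request_body(prompt)).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {key}",
            "Content-Type": "application/json",
            "User-Agent": "babel-fish-sketch/0.1",  # hypothetical client name
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return parse_translation(json.load(response))
```

With a key set, `call_groq(prompt)` returns the translation. `build_request_body` and `parse_translation` are pure functions, so they can be checked without any network access.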

If you’d like to experiment with the flow yourself, download it as a JSON file from the GitHub repo and import it into your Langflow instance.
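
Once the flow is imported, an app can run it over Langflow’s REST API. The sketch below assumes a local instance at Langflow’s default `http://127.0.0.1:7860`, the `/api/v1/run/<flow_id>` endpoint, and the response shape used in the code snippets Langflow generates for a flow. It also assumes the text to translate is the flow’s main input and the target language is set through a tweak on a separate Text Input component; `LANGUAGE_COMPONENT_ID` is hypothetical, so copy the real component IDs (and the exact snippet for your Langflow version) from your own flow.

```python
import json
import urllib.request
from typing import Optional

# Assumptions: a local Langflow instance on its default port and a
# hypothetical component ID for the flow's target-language Text Input.
LANGFLOW_URL = "http://127.0.0.1:7860"
LANGUAGE_COMPONENT_ID = "TextInput-language"  # hypothetical; copy the real ID from your flow


def build_run_payload(text: str, language: str) -> dict:
    """Send the text as the flow's main input and set the language via a tweak."""
    return {
        "input_value": text,
        "input_type": "chat",
        "output_type": "chat",
        "tweaks": {LANGUAGE_COMPONENT_ID: {"input_value": language}},
    }


def extract_text(response: dict) -> str:
    """Pull the chat output's text out of a /api/v1/run response."""
    return response["outputs"][0]["outputs"][0]["results"]["message"]["text"]


def run_flow(flow_id: str, text: str, language: str, api_key: Optional[str] = None) -> str:
    """Run the imported flow once and return the translation (needs a running Langflow)."""
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["x-api-key"] = api_key  # only needed if your instance requires auth
    request = urllib.request.Request(
        f"{LANGFLOW_URL}/api/v1/run/{flow_id}?stream=false",
        data=json.dumps(build_run_payload(text, language)).encode("utf-8"),
        headers=headers,
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=60) as response:
        return extract_text(json.load(response))
```

Usage would look like `run_flow("<your-flow-id>", "Take me to your leader!", "French")`. Overriding the language through `tweaks` means the same flow can serve any target language without editing it in the UI.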

Iterating and refining

Setting up the initial flow was quick, taking just a few minutes. However, it took longer to iterate through prompt templates and model combinations to get the results I wanted. Different LLMs perform better or worse with different prompt templates: some are faster, some are more accurate, and some are simply better suited to language translation.

For example, while I ended up using Groq’s llama-3.1-8b-instant model for translation as described above, I didn’t start there. I experimented with at least half a dozen different providers. Langflow made this trivial: I could drag a new provider onto the canvas, wire it up, and test it within seconds.

Here’s an example of dragging and wiring up a new provider and model:

Note: The “anthropic__API_KEY” value you see me choose is a global variable I set in Langflow to securely store my Anthropic API key. Global variables store values that are available to all of your components and flows.

While I ended up using a single provider and model for the translation portion of the flow, I was able to run a set of them in parallel and compare their results in real time. This let me test accuracy and performance side by side to get a feel for which one best suited my needs.
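
Langflow handles this comparison visually, but the same idea is easy to reproduce in code if you want repeatable numbers. Here’s a minimal sketch of a side-by-side harness: each provider is a hypothetical `(language, text) -> translation` function, all of them run concurrently on the same input, and results come back sorted by latency. Judging accuracy is still up to you, for example against a reference translation.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

# Hypothetical provider signature: (target_language, text) -> translation.
Translator = Callable[[str, str], str]
Result = Tuple[str, str, float]  # (provider name, translation, seconds)


def timed_call(name: str, translate: Translator, language: str, text: str) -> Result:
    """Call one provider and measure its wall-clock latency."""
    start = time.perf_counter()
    translation = translate(language, text)
    return name, translation, time.perf_counter() - start


def compare_providers(providers: Dict[str, Translator], language: str, text: str) -> List[Result]:
    """Run every provider concurrently on the same input, fastest first."""
    # One worker per provider so every call starts immediately (API calls are I/O-bound).
    with ThreadPoolExecutor(max_workers=max(len(providers), 1)) as pool:
        futures = [
            pool.submit(timed_call, name, fn, language, text)
            for name, fn in providers.items()
        ]
        results = [future.result() for future in futures]
    return sorted(results, key=lambda result: result[2])


if __name__ == "__main__":
    # Stand-in providers; real ones would wrap each provider's API client.
    def slow_provider(language: str, text: str) -> str:
        time.sleep(0.2)
        return f"[{language}] {text}"

    def fast_provider(language: str, text: str) -> str:
        return f"[{language}] {text}"

    for name, translation, seconds in compare_providers(
        {"slow": slow_provider, "fast": fast_provider}, "French", "Take me to your leader!"
    ):
        print(f"{name:>6}  {seconds:.3f}s  {translation}")
```

Threads are a reasonable fit here because each provider call mostly waits on the network; a CPU-bound local model would call for processes instead.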

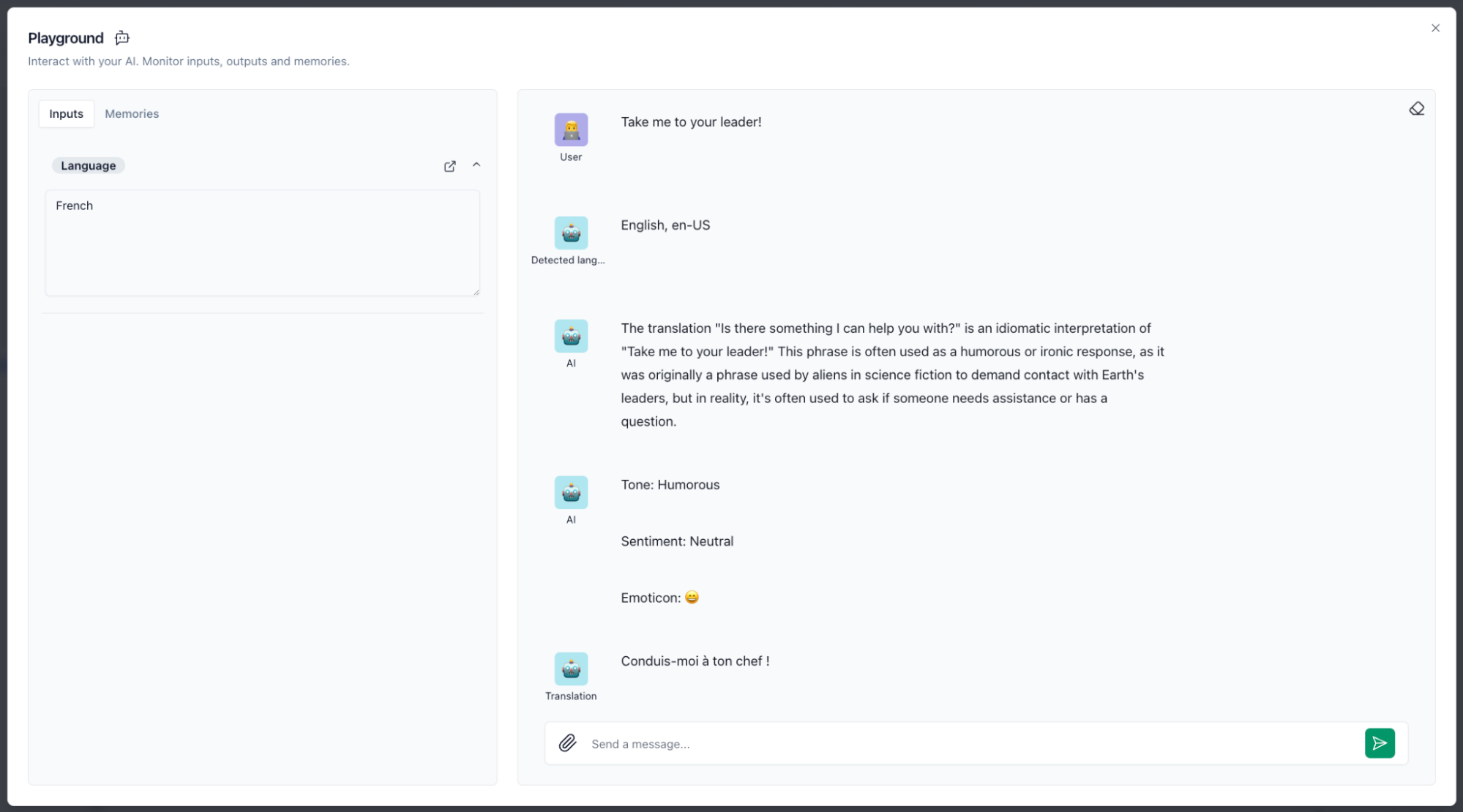

You can also use the “Playground” feature to experiment with your flows in real time. In the example below, I set the language to “French” and typed in the phrase “Take me to your leader!” to see the results from all of my outputs.

To do all this, I never had to touch any code, and I was able to quickly answer two of the questions from earlier:

- Can I do this with generative AI using LLMs?

- Can I do this with text?

The answer to both questions: Yes!

Coming up next

In this first part of our journey, we explored the initial steps of building a language translation flow using generative AI and large language models. We discovered how Langflow can simplify the process, allowing for quick iteration and experimentation with different models and prompts.

While setting up the initial flow was straightforward, fine-tuning it to achieve the desired results required some effort. Different LLMs have their strengths and weaknesses, and finding the right combination is key.

In the next part of this series, we’ll dive into building the app itself. We’ll explore how to create a user-friendly interface using Streamlit and tackle the challenges of real-time audio processing. For now, feel free to experiment with the babbelfish.json flow in Langflow yourself and try your hand at translating some languages. Stay tuned as we continue this fun journey of bringing a sci-fi concept to life!