As you adopt AI practices, one of the more challenging aspects of getting data AI-ready is “chunking.” Chunking is, as the name suggests, breaking down large blocks of text into smaller segments. Each chunk is then vectorized, stored, and indexed. Any type of data can be chunked: text documents, images, sound files. For clarity, we’ll stick to text chunking, but the theory and process is the same for any type of data.

Chunking is a necessary step in vectorizing data for many reasons. Smaller chunks of data use less memory, accelerate retrieval times, enable parallel processing of data, and allow scaling of the database. Breaking content down into smaller parts can improve the relevance of content that’s retrieved from a vector database, as well. Retrieved chunks are passed into the prompt of the large language model (LLM). When used as part of retrieval-augmented generation (RAG), chunking helps control costs as fewer, more relevant objects can be passed to the LLM.

As Maria Khalusova, a developer advocate at DataStax partner Unstructured, wrote in a recent blog post: “Chunking is one of the essential preprocessing steps in any RAG system. The choices you make when you set it up will influence the retrieval quality, and as a consequence, the overall performance of the system.”

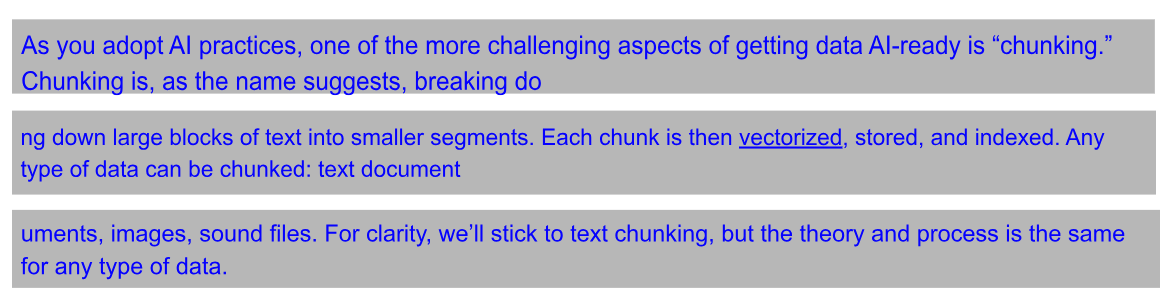

An example of chunking text

Let’s look at an example. Conveniently, this blog post is made of text, so rather than pull in something from the outside world, let’s take the first paragraph of the post. For the record, that paragraph has 72 words, 422 total characters, and 350 characters excluding spaces. Let’s set the standard chunking parameters as follows:

chunkSize: 150,

chunkOverlap: 5,

This will tell my processing model to create chunks of 150 characters with a five character overlap. So breaking down the paragraph above will result in chunks that look like this:

Each of the resulting chunks would be stored in the database as an embedded vector and indexed. The chunkOverlap setting defines how many characters from the previous and next chunk are included. You see the value of overlap between the first and second chunk, where ‘do’ is split in chunk 1 while the full word ‘down’, rather than just ‘wn’, is maintained in chunk 2. Overlap helps provide semantic integrity for searches by preserving context that might otherwise be lost.

Chunking enables LLMs to process large amounts of data in small, meaningful units that fit with the LLM’s memory and still preserve enough semantic content to allow the LLM to process and "understand" the content and meaning.

Insert token, please

While vector embedded chunks are how the data is stored and retrieved, that’s not actually how it’s fed to the LLM. LLMs consume data, and charge by tokens. Tokens are the smallest amount of data an LLM ingests. A token is roughly four characters excluding spaces and some control characters; token size is dependent on the LLM provider, but generally equals ¾ of a word.

LLMs analyze tokens to determine how semantically related they are. The chunks above, using the TikTokenzer, work out to 34, 33, and 32 tokens respectively. Different LLMs have different limits on the number of tokens they can process: the context window. Controlling chunk size can help ensure your data will fit into your LLM’s context window. Processing more relevant data increases relevancy of the results and helps reduce hallucinations.

What’s the best way to chunk data?

There is no definitive chunking strategy that suits every application. A number of factors play into choosing a chunking strategy:

- Data size - The size of the source data needs to be considered. Is the data being imported from: books? IMs? Blogs? If the chunk size is too small, the semantic relationships between the words can be broken up and lost. If the chunk size is too large and contains too much data, the results may get watered down with extraneous information not actually relevant to the query.

- Query complexity - The types of queries expected to be used against the data informs the chunk size as well. Are the queries going to be short and specific? Smaller chunks help identify specific relationships without additional noise. More complex queries requiring more comprehensive results benefit from larger chunk sizes to identify broad context in the data. Focusing and aligning the expected query size with chunking helps to optimize results.

- LLM considerations - Each LLM has unique properties for handling queries and data. Each LLM has a defined context window. Tailoring chunk size to the specific LLM being used can increase relevancy by ensuring sufficient data is retrieved to process the query. It can also reduce or increase LLM costs by changing the number of tokens processed.

- Text splitters - There are a variety of different text splitters available for use, each processing text differently. Some splitters use simple character count, as described above, while others allow you to manipulate the text, split at defined characters, and remove spaces and other unwanted characters. Other splitters offer support for specific scripting or programming languages, tokens, or even semantic splitters that attempt to make semantic connections for words and sentences, retaining context within the chunk.

Choosing a chunking strategy

The conventional wisdom on determining the best chunking strategy for your application is trial and error. Experiment with different chunking and overlap settings on your data and find a solution that works for you. If this is the approach for you, Langflow is the tool to use to save time and effort. With Langflow, you can experiment with different LLMs, different chunking splitters, and different chunking settings. Using real data, you can easily test different strategies, iterating through combinations until you find one that best fits the needs of your application.

If you’re an Astra DB user, there’s an easier way. For Astra DB users, we recommend a chunk size of 1024 and an overlap setting of 128. This is an excellent starting point and should suit many generative AI applications. While some experimentation and tuning might be desirable, Astra DB users can start quickly with these settings and still use Langflow for any additional experimentation required to get the best results for their application.

Whatever approach you decide to take, it’s important to understand that chunking impacts not only the performance of the application and relevance of query results, but also the cost of running it. Finding the optimal balance between efficiency and cost will ensure your application can scale without degrading performance or breaking the bank.

If you’re ready to dive deeper into chunking and get a deeper understanding of the options and tradeoff in different chunking strategies, check out this post from Unstructured, “Chunking for RAG: best practices.”