Supporting streaming in your AI applications can increase their responsiveness and interactivity. However, implementing streaming can present some challenges.

Here, we’ll discuss the benefits of streaming and show how LangChain simplifies implementing it. We’ll also see how Langflow, a visual tool for building LangChain apps, makes adding streaming support to your AI apps even easier.

Use cases for streaming with large language models

Many AI applications call large language models (LLMs) in real time on behalf of users. For example, a chatbot may call an LLM to answer customer support questions. To form an answer, the query would combine the LLM’s foundational data and generative AI capabilities with contextual data specific to the user’s problem domain.

By default, the app must wait for the LLM to produce a full response before it returns anything to the user. This can frustrate users, as the LLM may take several seconds to respond. (Usability research commonly cites roughly 0.1 to 1 second as the window within which users perceive an app as responding immediately to an action.)

When you enable streaming, you can receive an LLM’s response in chunks, as the AI generates it. You can return these chunks to the user after performing validation and error checking. This increases responsiveness and also breeds familiarity; it gives the user the feeling that a human, not a machine, is typing a response to their question.
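To make the difference concrete, here is a minimal sketch in plain Python. It does not call a real LLM: `fake_llm_tokens` is a hypothetical stand-in that yields one word at a time with an assumed fixed delay, so you can compare how long the user waits for the first visible text in the blocking and streaming cases.

```python
import time


def fake_llm_tokens(text, delay=0.05):
    """Hypothetical stand-in for an LLM: yields one word at a time with a fixed delay."""
    for word in text.split():
        time.sleep(delay)  # simulate per-token generation time
        yield word + " "


def blocking_response(text, delay=0.05):
    """Wait for the whole response; return (response, seconds until anything is shown)."""
    start = time.perf_counter()
    response = "".join(fake_llm_tokens(text, delay))
    return response, time.perf_counter() - start


def streaming_response(text, on_chunk, delay=0.05):
    """Forward each chunk as it arrives; return (response, seconds until first chunk)."""
    start = time.perf_counter()
    first_chunk_at = None
    parts = []
    for chunk in fake_llm_tokens(text, delay):
        if first_chunk_at is None:
            first_chunk_at = time.perf_counter() - start
        on_chunk(chunk)  # e.g. print to a terminal or push over a websocket
        parts.append(chunk)
    return "".join(parts), first_chunk_at


if __name__ == "__main__":
    answer = "Streaming lets users start reading before the model has finished"
    _, wait_blocking = blocking_response(answer)
    _, wait_streaming = streaming_response(answer, lambda c: print(c, end="", flush=True))
    print(f"\nfirst output after {wait_blocking:.2f}s (blocking) vs {wait_streaming:.2f}s (streaming)")
```

Both functions produce the same text; only the time until the user sees something changes.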

Streaming LangChain: The basics

LLMs pack a lot of built-in functionality into a simple API call. Despite this, GenAI app builders still have to write a lot of supporting code themselves. That includes request/response handlers for each LLM they support, as well as validation, error checking, exception handling, scaling, parallelization, and other operational tasks.

This is where frameworks like LangChain come in.

Note: LangChain streaming is distinct from streaming in event-driven architectures, microservices, and messaging systems. The streaming we’re discussing here is LangChain’s alternative to batch interaction with LLMs, where you wait for the complete response before doing anything with it.

LangChain simplifies building production-ready GenAI applications on top of LLMs. It provides a number of out-of-the-box components that app developers can string together using the LangChain Expression Language (LCEL), including support for critical features such as retrieval-augmented generation (RAG). This makes building GenAI apps as simple as piping output between Unix commands.
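The Unix-pipe analogy can be illustrated without LangChain at all. The sketch below is not LangChain’s implementation; it uses a hypothetical `Step` class that overloads Python’s `|` operator (via `__or__`) so that each step’s output becomes the next step’s input, which is the composition idea behind LCEL’s `prompt | model | parser` syntax. The prompt, model, and parser here are toy lambdas.

```python
class Step:
    """Hypothetical pipeline step wrapping a one-argument function."""

    def __init__(self, fn):
        self.fn = fn

    def __or__(self, other):
        # a | b builds a new step that runs a, then feeds its output into b
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value):
        return self.fn(value)


# Toy stand-ins for a prompt template, a model, and an output parser
prompt = Step(lambda inputs: f"tell me a joke about {inputs['topic']}")
model = Step(lambda text: {"content": f"  (model reply to: {text})  "})
parser = Step(lambda message: message["content"].strip())

chain = prompt | model | parser
print(chain.invoke({"topic": "parrots"}))
# (model reply to: tell me a joke about parrots)
```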

One convenient feature of LangChain is support for streaming LLM responses. A LangChain component supports streaming by implementing the Runnable interface. Many LangChain components implement Runnable, including:

- Parsers - Take the output from an LLM and parse it into its final format

- Prompts - Format LLM inputs to guide generation of responses

- Retrievers - Fetch application-specific data from sources such as documents and vector stores (often with the help of embedding models)

- Agents - Use an LLM to decide which action to take next based on high-level directives
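Implementing Runnable matters for streaming because the interface gives every component both a one-shot `invoke` and a chunked `stream` method. In LangChain, the default `stream` simply yields the full `invoke` result as a single chunk, and components that can genuinely stream override it. The toy classes below (`ToyRunnable`, `ToyModel`, `ToyEcho`, and `ToyUpperParser` are hypothetical, not LangChain classes) sketch that pattern, including a parser that transforms chunks as they pass through rather than waiting for the whole input.

```python
from typing import Iterator


class ToyRunnable:
    """Hypothetical base class mirroring the invoke/stream split."""

    def invoke(self, value):
        raise NotImplementedError

    def stream(self, value) -> Iterator:
        # Default: no real streaming, just one chunk holding the full result
        yield self.invoke(value)


class ToyModel(ToyRunnable):
    """Pretends to generate a fixed reply word by word."""

    def __init__(self, reply):
        self.reply = reply

    def invoke(self, value):
        return "".join(self.stream(value))

    def stream(self, value):
        for word in self.reply.split():
            yield word + " "


class ToyEcho(ToyRunnable):
    """Only implements invoke, so it inherits the one-chunk default stream."""

    def invoke(self, value):
        return f"echo: {value}"


class ToyUpperParser:
    """Transforms an incoming chunk stream without waiting for it to finish."""

    def transform(self, chunks):
        for chunk in chunks:
            yield chunk.upper()


model = ToyModel("streams arrive in pieces")
print(list(model.stream("hi")))  # ['streams ', 'arrive ', 'in ', 'pieces ']
print(list(ToyEcho().stream("hi")))  # ['echo: hi']
print(list(ToyUpperParser().transform(model.stream("hi"))))  # ['STREAMS ', 'ARRIVE ', 'IN ', 'PIECES ']
```

If any step in a chain behaves like `ToyEcho`, everything downstream of it receives one big chunk instead of a stream.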

Using LangChain, you can easily enable streaming support for an LLM, streaming sentences or words to the user as the LLM generates them. You can also specify that LangChain stream the intermediate steps an agent takes when producing output. That’s useful for examining how the agent reached a specific conclusion and from where it pulled its final data.
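Models typically emit tokens that don’t line up with word or sentence boundaries. If you would rather show the user whole sentences, you can buffer the chunk stream yourself. Here is a minimal sketch in plain Python; `sentences_from_chunks` is a hypothetical helper, and its sentence detection (split after `.`, `!`, or `?` followed by whitespace) is a deliberately naive assumption.

```python
import re
from typing import Iterable, Iterator

# Naive sentence boundary: whitespace that follows ., ! or ?
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def sentences_from_chunks(chunks: Iterable[str]) -> Iterator[str]:
    """Re-chunk a token stream so that each yielded item is one complete sentence."""
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        parts = SENTENCE_END.split(buffer)
        # Every part except the last is a finished sentence
        for sentence in parts[:-1]:
            yield sentence
        buffer = parts[-1]
    if buffer.strip():
        yield buffer.strip()  # flush whatever is left when the stream ends


tokens = ["Str", "eaming is", " fun. It", " feels fa", "st! Does", " it?"]
print(list(sentences_from_chunks(tokens)))
# ['Streaming is fun.', 'It feels fast!', 'Does it?']
```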

Benefits and challenges of streaming LangChain

As noted, streaming provides a more immersive experience, which can increase user satisfaction with AI apps and improve adoption. With LangChain, developers can turn streaming on without building and managing their own streaming capability, which reduces both development time and code complexity.

Streaming LangChain also makes it easier to ship a scalable app. Because LangChain components expose asynchronous methods such as astream, a single process can serve many simultaneous LLM streams without blocking on any one of them.

Even when using LangChain, however, LLM streaming can present a few challenges. A key challenge is that streaming works best with unstructured responses (e.g., chat-like output). Structured responses, such as JSON- or YAML-formatted output, take more care, because they generally can’t be fully validated until the entire response has arrived. (However, as we’ll discuss below, streaming LangChain also makes these complicated situations easier to implement.)
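The structured-output problem is easy to see with the standard library alone: a prefix of a JSON document is usually not valid JSON on its own. In this sketch the chunk boundaries and the population figure are made up for illustration.

```python
import json

# Hypothetical chunks of one JSON response, as a model might stream them
chunks = ['{"countries": [{"name": "Fra', 'nce", "population": 68', '000000}]}']


def validity_per_chunk(chunks):
    """For each new chunk, report whether everything received so far parses as JSON."""
    received = ""
    results = []
    for chunk in chunks:
        received += chunk
        try:
            json.loads(received)
            results.append(True)
        except json.JSONDecodeError:
            results.append(False)
    return results


print(validity_per_chunk(chunks))  # [False, False, True]
```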

In addition, streaming with LangChain doesn’t free you from thinking about issues like performance. You can still encounter latency, especially when handling a large volume of requests or responses. You can manage these issues by optimizing your streaming logic and by using techniques such as batching requests to reduce the number of LLM calls you make.
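A related way to keep latency predictable under load, swapped in here in place of batching, is bounded concurrency: cap how many LLM streams are open at once so a burst of users doesn’t exceed your provider’s rate limits. The sketch uses plain `asyncio`; `fake_stream` is a hypothetical stand-in for an async LLM stream such as the one `astream` returns, and the limit of 3 concurrent streams is an arbitrary assumption.

```python
import asyncio

MAX_CONCURRENT_STREAMS = 3  # assumed limit; tune to your provider's rate limits


async def fake_stream(prompt):
    """Hypothetical async LLM stream: yields a few chunks with a small delay."""
    for i in range(3):
        await asyncio.sleep(0.01)
        yield f"{prompt}-chunk{i} "


async def handle_request(prompt, limiter, tracker):
    async with limiter:  # wait here if too many streams are already open
        tracker["active"] += 1
        tracker["peak"] = max(tracker["peak"], tracker["active"])
        try:
            parts = [chunk async for chunk in fake_stream(prompt)]
        finally:
            tracker["active"] -= 1
    return "".join(parts)


async def serve(prompts):
    limiter = asyncio.Semaphore(MAX_CONCURRENT_STREAMS)
    tracker = {"active": 0, "peak": 0}
    results = await asyncio.gather(*(handle_request(p, limiter, tracker) for p in prompts))
    return results, tracker["peak"]


results, peak = asyncio.run(serve([f"q{i}" for i in range(10)]))
print(len(results), "responses; at most", peak, "streams open at once")
```

`asyncio.gather` returns results in the order the prompts were submitted, even though the streams interleave.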

Implementing streaming in LangChain

You can take one of two approaches to implementing streaming in LangChain. The default approach is to call either the stream or astream method on a Runnable object. (astream is the asynchronous version of stream.) For example, you could stream responses asynchronously from Anthropic’s Claude using astream as follows:

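```python
import asyncio

from langchain_anthropic import ChatAnthropic
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate

# Requires ANTHROPIC_API_KEY; the model name is an example, use any Claude model you can access
model = ChatAnthropic(model="claude-3-haiku-20240307")
prompt = ChatPromptTemplate.from_template("tell me a joke about {topic}")
parser = StrOutputParser()

# LCEL: pipe the prompt into the model, then the model output into the parser
chain = prompt | model | parser


async def main():
    # astream yields parsed string chunks as the model generates them
    async for chunk in chain.astream({"topic": "parrot"}):
        print(chunk, end="|", flush=True)


asyncio.run(main())
```

This example uses LCEL to chain three components in sequence: a prompt, a model (Claude), and a parser. The code then calls astream on the resulting chain to read and print the answer in a loop as Claude generates it. (In a Jupyter notebook, which already runs an event loop, skip asyncio.run and use the async for loop directly.)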

For structured text such as JSON, you can use these methods together with LangChain’s JsonOutputParser, which auto-completes partial output into a valid document as it streams in. This takes slightly more care: any step in the chain that operates on finalized inputs rather than on the input stream will break streaming, so you need to replace such a step with a generator function that processes the stream as it arrives.
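To see what auto-completing a partial JSON response involves, here is a minimal, standard-library-only sketch of the idea. It is not LangChain’s JsonOutputParser code: the hypothetical `close_partial_json` appends whatever closing quote and brackets a truncated document is missing, and the hypothetical `best_effort_parse` trims trailing characters (such as a half-written key or a dangling comma) until the result parses. The chunks and figures are made up for illustration.

```python
import json


def close_partial_json(text):
    """Append the closing quote and brackets that a truncated JSON string lacks."""
    closers = []  # expected closing characters, innermost last
    in_string = False
    escaped = False
    for ch in text:
        if in_string:
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == '"':
                in_string = False
        elif ch == '"':
            in_string = True
        elif ch in "{[":
            closers.append("}" if ch == "{" else "]")
        elif ch in "}]" and closers:
            closers.pop()
    return text + ('"' if in_string else "") + "".join(reversed(closers))


def best_effort_parse(text):
    """Parse the longest prefix of text that is valid JSON once closed, else None."""
    for end in range(len(text), 0, -1):
        try:
            return json.loads(close_partial_json(text[:end]))
        except json.JSONDecodeError:
            continue  # drop one more trailing character and retry
    return None


chunks = ['{"countries": [{"name": "Fra', 'nce", "popu', 'lation": 68000000}, {"na', 'me": "Spain"}]}']
received = ""
for chunk in chunks:
    received += chunk
    print(best_effort_parse(received))
```

Each printed value is a valid (if incomplete) object, so the UI can render a growing result instead of waiting for the final chunk. Trimming character by character is quadratic in the worst case, which is fine for a sketch but not for very large documents.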

Another approach is to use the astream_events or astream_log method to read the output as a stream of events. This gives you not just the final output but also access to the intermediate steps taken by each component of the chain:

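```python
import asyncio

from langchain_anthropic import ChatAnthropic
from langchain_core.output_parsers import JsonOutputParser

# Model name is an example; requires ANTHROPIC_API_KEY
chain = ChatAnthropic(model="claude-3-haiku-20240307") | JsonOutputParser()


async def main():
    return [
        event
        async for event in chain.astream_events(
            'output a list of the countries france, spain and japan and their populations in JSON format. Use a dict with an outer key of "countries" which contains a list of countries. Each country should have the key `name` and `population`',
            version="v2",  # v2 is the recommended event schema version
        )
    ]


events = asyncio.run(main())
```

This returns streaming events from both the model and the parser in real time, giving you visibility into how each component of the chain constructed the final response.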
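Because astream_events produces a flat stream of event dictionaries, you usually filter it by event type. The sketch below works on hand-written sample events rather than a live model, so it runs anywhere. It assumes the v2 event shape (an `event` type such as `on_parser_stream`, the component `name`, and a `data` dict holding the `chunk`) and simplifies model chunks to plain strings, whereas LangChain emits message-chunk objects; check the LangChain reference for the exact schema of your version. `chunks_of_type` and `count_by_type` are hypothetical helpers.

```python
# Hand-written sample events, shaped (by assumption) like astream_events v2 output
sample_events = [
    {"event": "on_chain_start", "name": "RunnableSequence", "data": {}},
    {"event": "on_chat_model_stream", "name": "ChatAnthropic", "data": {"chunk": '{"countries": ['}},
    {"event": "on_parser_stream", "name": "JsonOutputParser", "data": {"chunk": {"countries": []}}},
    {"event": "on_chat_model_stream", "name": "ChatAnthropic", "data": {"chunk": '{"name": "France"}'}},
    {"event": "on_parser_stream", "name": "JsonOutputParser", "data": {"chunk": {"countries": [{"name": "France"}]}}},
    {"event": "on_chain_end", "name": "RunnableSequence", "data": {}},
]


def chunks_of_type(events, event_type):
    """Return the data chunk of every event whose type matches event_type."""
    return [e["data"]["chunk"] for e in events if e["event"] == event_type]


def count_by_type(events):
    """Count how many events of each type were emitted."""
    counts = {}
    for e in events:
        counts[e["event"]] = counts.get(e["event"], 0) + 1
    return counts


print(count_by_type(sample_events))
print(chunks_of_type(sample_events, "on_parser_stream")[-1])  # latest partial JSON
```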

Implement streaming LangChain with Langflow

Streaming LangChain is even easier to implement if you use Langflow to build your LangChain apps. Langflow is a visual Integrated Development Environment (IDE) for building LangChain-based applications. Using Langflow, you can create a new GenAI app quickly from a template and customize it to your liking, adding and removing components via a simple UX.

Using Langflow, you can round-trip between code and the IDE. Langflow makes the easy things easy without making the hard things impossible.

Langflow is open source. DataStax also offers Langflow as a service as part of our one-stop AI platform, making it easy to build secure, compliant, and scalable GenAI apps with RAG support.

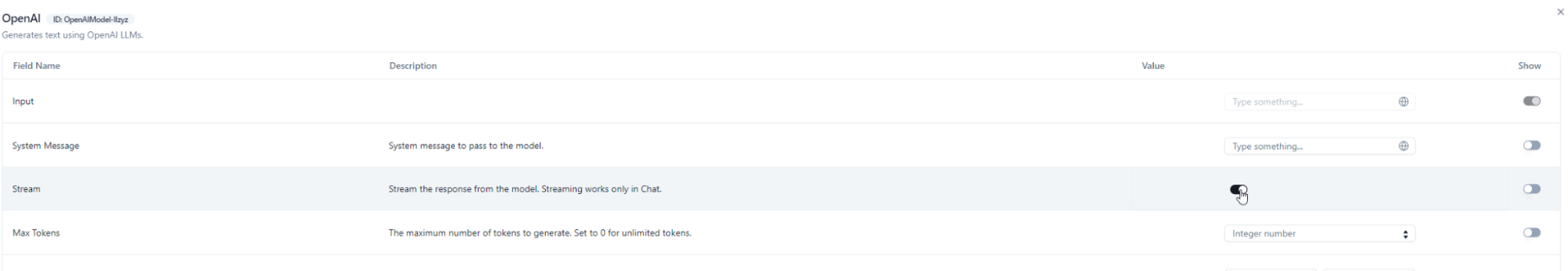

Enabling streaming in Langflow is extremely easy. In your chat-based workflow, select your LLM component (OpenAI in this example) and then select Advanced Settings.

Then, in Advanced Settings, you toggle the stream setting to On.


And that’s it! The app will now stream responses to any queries made through its front-end chat interface.

Build more responsive streaming LangChain apps

An LLM is a critical component of a GenAI app, but not the only one. LLMs give you general language generation capabilities and generalized knowledge based on years of historical data. You still need to supplement them with your own domain-specific context.

DataStax fills this gap by making it faster and easier than ever to build RAG-enabled LLM apps. Using DataStax, you can store domain-specific context in Astra DB, our high-performance vector database.

DataStax supports building apps on top of Astra DB using LangChain. LangChain’s ease of use combined with Astra DB provides a fully integrated stack for developing production-grade GenAI applications. Langflow makes building RAG-powered apps with Astra DB and LangChain even easier.

Want to try it out? Sign up for a DataStax account today.